Deploying a TrueNAS Backup Server to my hot Texas Garage

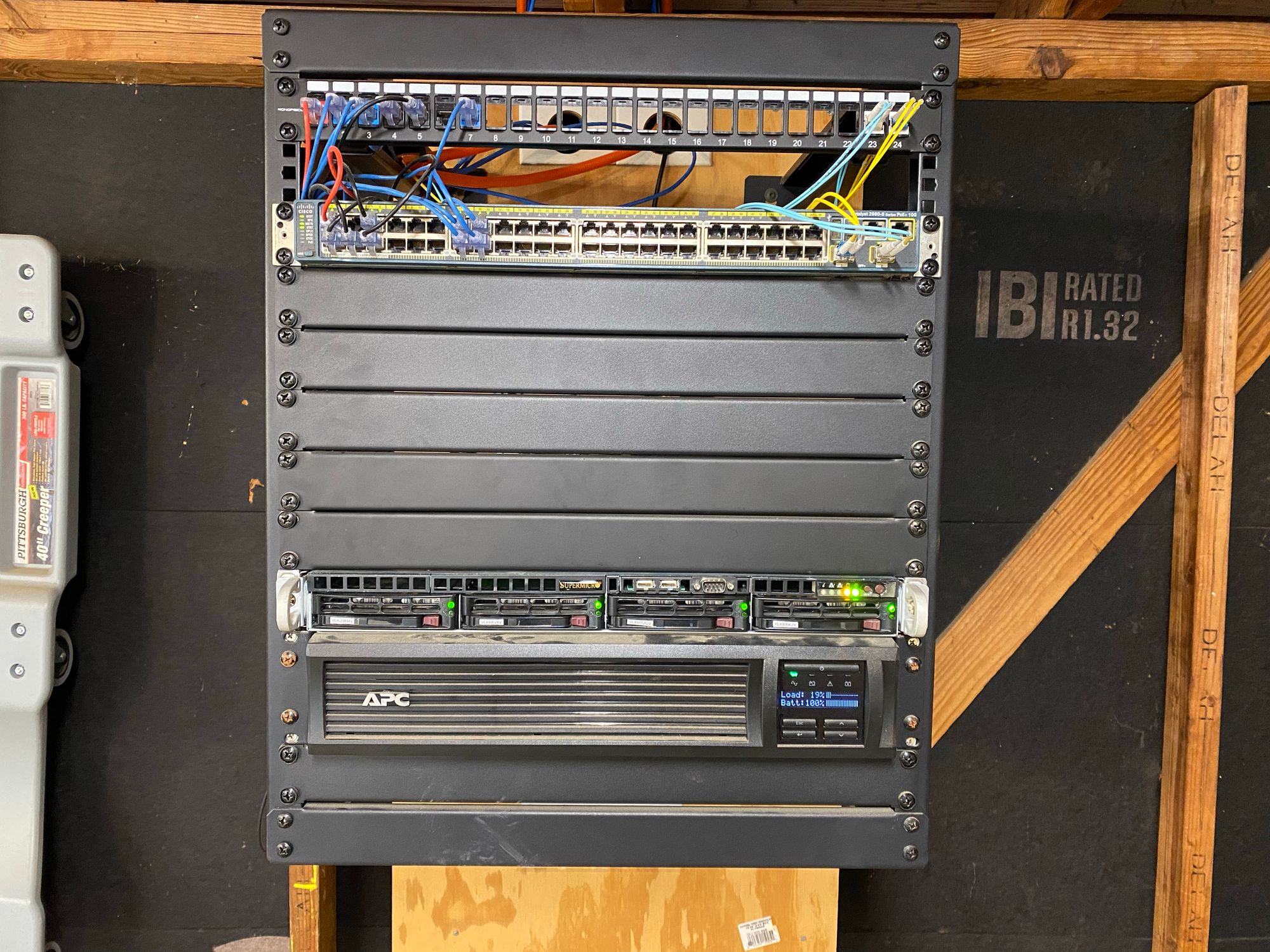

If you've read my older posts, you know I have a rack in my unconditioned detached garage with a Cisco switch, an APC UPS, a couple of Raspberry Pis, and some other miscellaneous items. Everyone told me it would all fail because it would get too hot in summer and too cold in winter, and there would be too much dust. I did it anyway, because no one could actually back up those claims with real-world experience.

So far we've been through record-breaking cold and record-breaking heat, and everything is fine. I've not had a single problem. The passively cooled Raspberry Pis have no trouble, the switch doesn't even ramp up its fans, and the UPS just sits there doing its thing.

So, I decided to deploy a backup server to the garage. Having a TrueNAS Server for backups in my detached garage has a lot of benefits.

The idea here is to replicate my backups to this NAS, as it's physically about 150ft away from my main rack and in a different building. Networking to the garage is fiber, so lightning can't come in through an outdoor AP or camera, into the same switch, and nuke it. It's also quite well separated electrically, being in a different building on a separate UPS, and much closer to ground. Before this, my replicated data and long-term local backups (not my only backups!) were in the same rack as the original data, which has problems:

- Roof leak over the rack could damage both original and backups

- 80ft Water Oak could smash into the house, and crush both

- Physical theft: if they steal the rack in the house, it's not likely they'll steal all the junk in my garage rack too. Or at least less likely

- Lightning strike through a switch could kill both

- UPS failing could nuke both

There is a LOT of benefit to replicating backups to a different location on your property, even if it is a harsher environment. The odds of losing this entire array are low, and even if I do, the snapshots can be re-replicated, as they are stored for the full retention period on the main NAS as well as the secondary. The odds of me losing my primary array AND this one are extremely slim. The entire purpose of this copy is to protect the data against physical damage.

It also moves heat outside the house, which is a plus. And noise is not a concern.

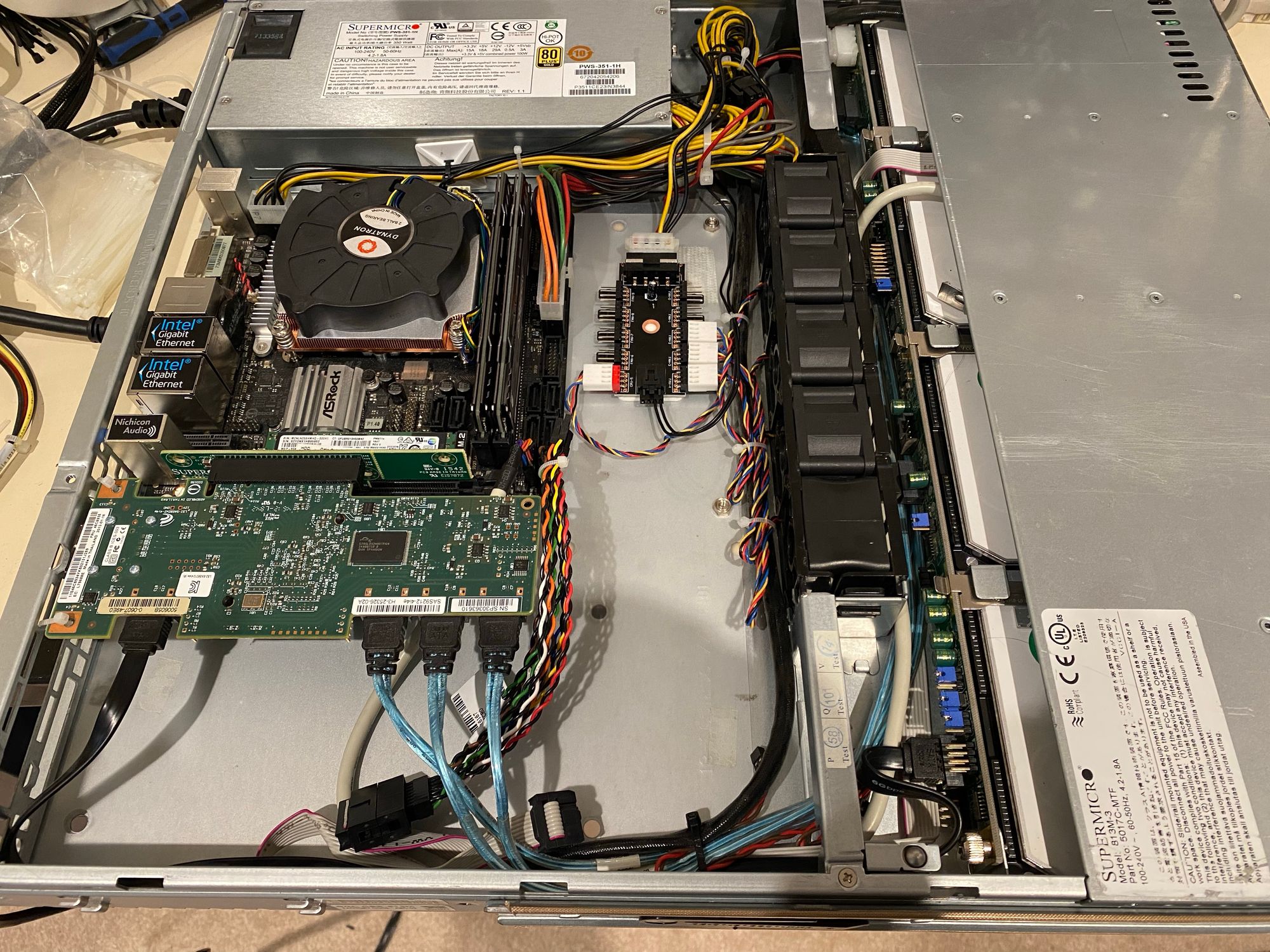

The hardware was all free apart from a fan controller and a CPU heatsink. I had all the other parts, including the drives, lying around.

Here are the specs:

- Intel Pentium G4560

- 2 x 4GB DDR4 DIMMs (non-ECC)

- ASRock H270M-ITX Motherboard (Dual Intel NIC!)

- SAMSUNG SSD PM871a M.2 SATA 256G (TrueNAS Boot Drive)

- Supermicro SYS-5017C-M Chassis

- Supermicro 350W 80+ Gold PSU

- 4 x 40mm Supermicro Fans

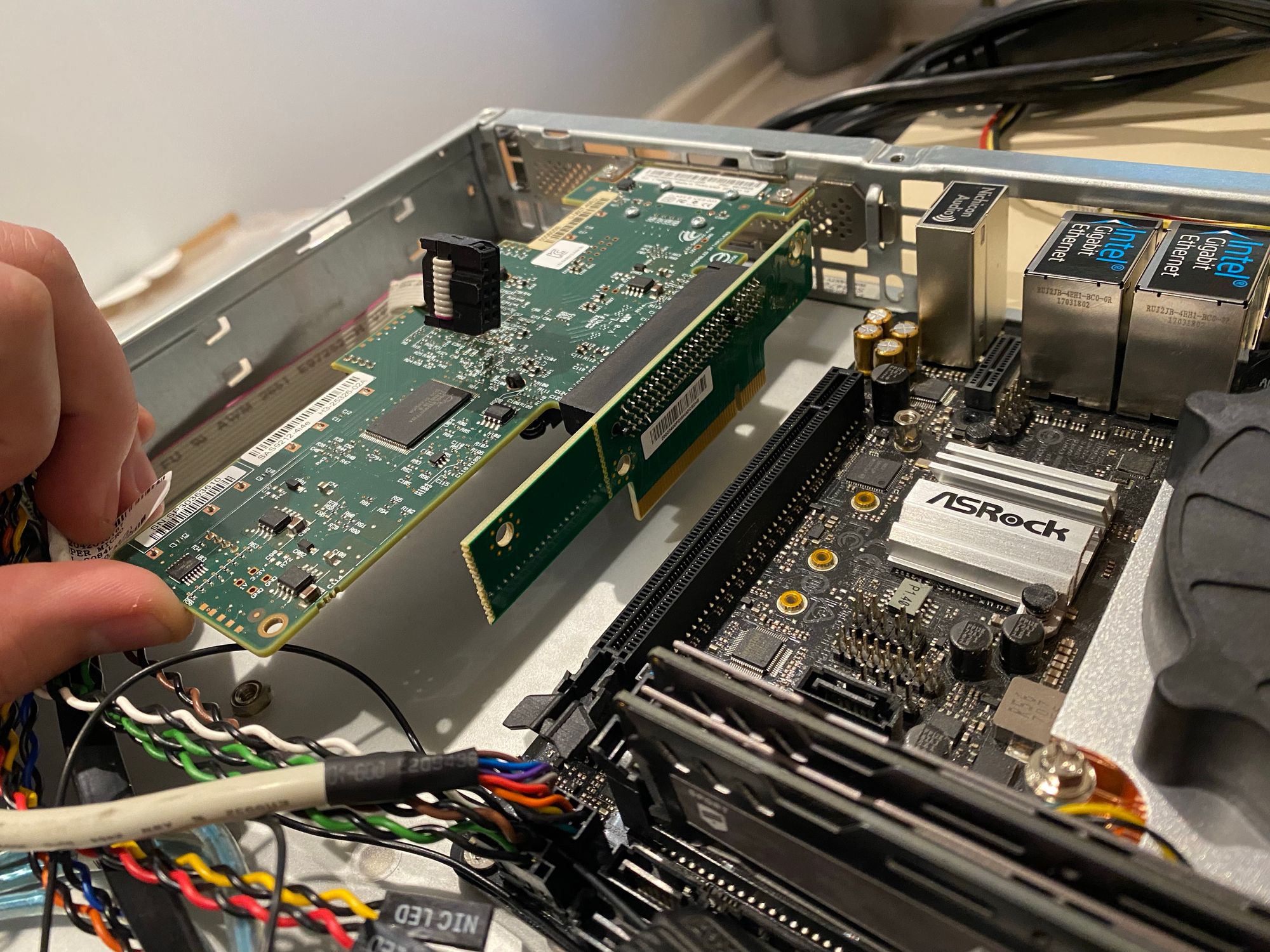

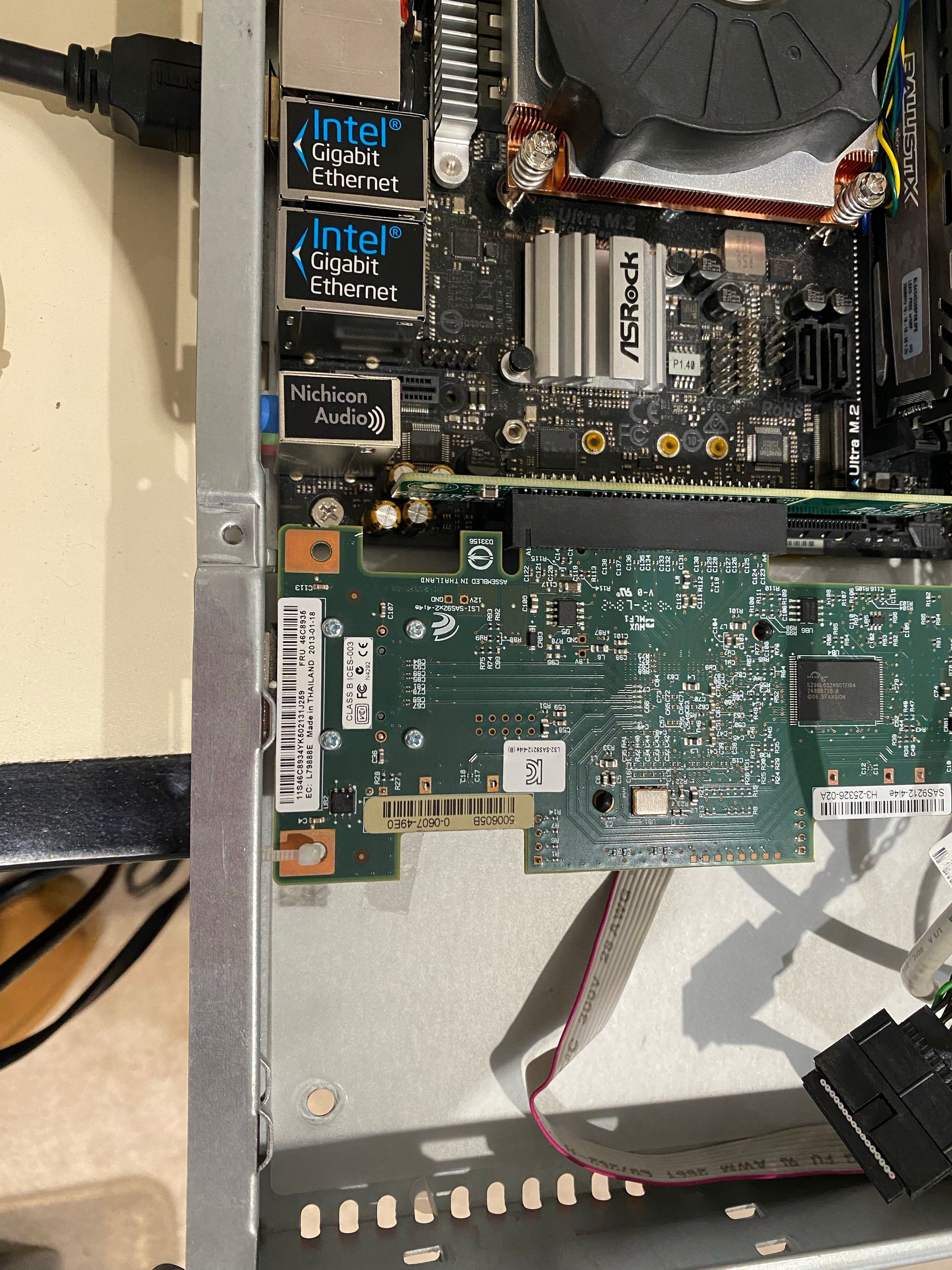

- LSI 9212-4i4e Flashed to IT Mode

- ZRM&E 4Pin 12V PWM Fan Hub Speed Controller

- Dynatron K199 CPU Cooler

- 4 x 8TB SAS Disks I had lying around

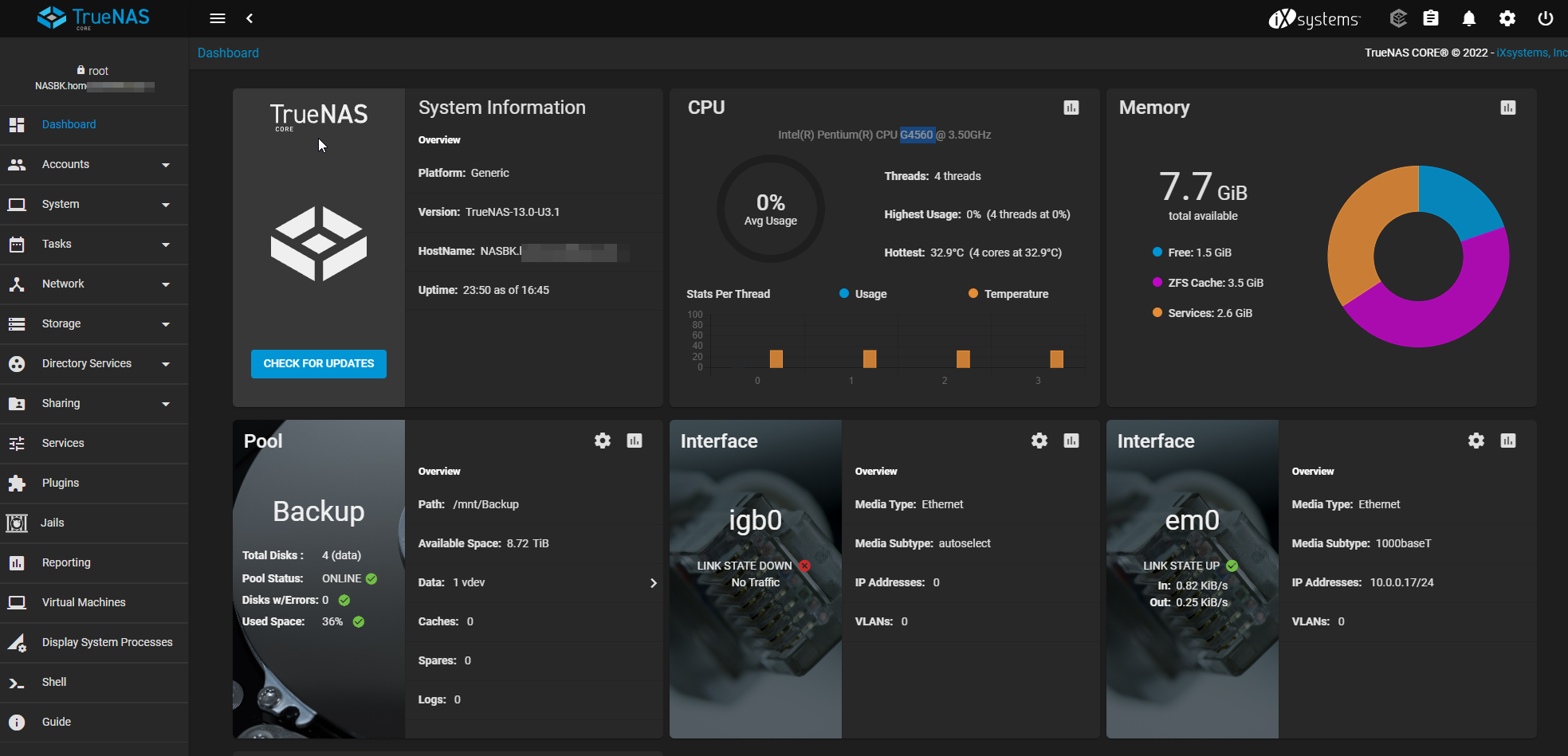

The first thing you might notice is that I'm going to be using just 8GB of RAM with TrueNAS, which is apparently a big no-no. I really don't buy it. I don't see why you can't just use 8GB of RAM, so I took my secondary NAS, which is a VM on my ESXi server, and lowered it from 64GB of RAM to 8GB. I could not notice a single change in performance in the week that it had 8GB of RAM, and I ended up just leaving it like that.

Just like when people told me all my hardware would die in the hot garage, everyone is wrong. Don't believe people until you try it for yourself!

You'll also notice a lot of this is consumer hardware, so I did run into some issues. The first is that the motherboard expects a cutout behind the CPU, and the Supermicro chassis does not have one, so the bottom of the board actually touches the chassis. Supermicro does include a plastic shield under the board, but I was worried, so I coated the entire bottom of the board in Kapton tape. If you don't have Kapton tape, get some! It's great for doing weird projects.

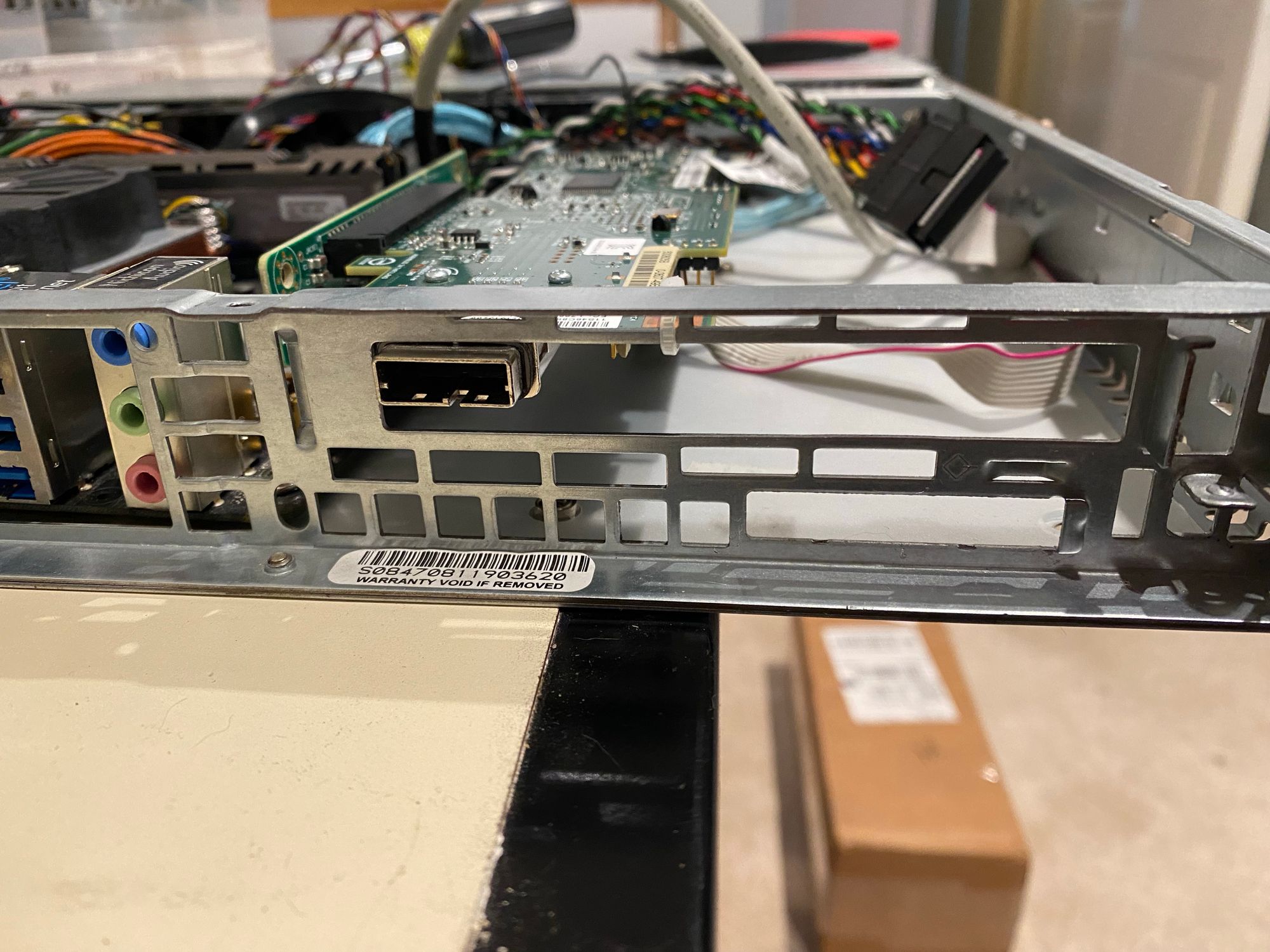

The next issue is that the single PCIe slot didn't line up with where the riser needed to be to get a card in this chassis.

Luckily, I was able to take the IO plate off the card and just zip tie it in place. That's fine, right?

As you can see, it's a perfect fit!

This motherboard only has 2 fan headers, one for the CPU fan and one more. I'm not sure why, because ITX builds usually need more than 1 fan in total, but I was able to get around this with the PWM fan controller mentioned above. I was skeptical, but this item is FANTASTIC and I would get it again. It was $10, and it is able to control all the fans via PWM and even feed data from one fan back to the board.

You can see it here in this picture of the inside of the case. I think I was able to do an okay job at getting it to look neat.

Since I now have full control over the fans, I set a quite aggressive fan curve, since I'll never hear it anyway.
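If you've never set one, a fan curve is just a handful of temperature/duty points with a straight line drawn between them. Here is a minimal Python sketch of the idea. The points in `CURVE` are made up for illustration and are not the values I actually set, and `fan_duty` is a hypothetical helper, not something the board or the fan hub exposes.

```python
from bisect import bisect_right

# Hypothetical (temperature in °C, PWM duty in %) points for an
# "aggressive" curve. Illustrative values only, not my real settings.
CURVE = [(30, 40), (45, 60), (60, 85), (70, 100)]


def fan_duty(temp_c, curve=CURVE):
    """Return the PWM duty (%) for a temperature by linear interpolation."""
    temps = [t for t, _ in curve]
    # Clamp below the first point and above the last point.
    if temp_c <= temps[0]:
        return float(curve[0][1])
    if temp_c >= temps[-1]:
        return float(curve[-1][1])
    # Find the segment that contains temp_c and interpolate along it.
    i = bisect_right(temps, temp_c)
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


if __name__ == "__main__":
    for temp in (25, 45, 52.5, 80):
        print(f"{temp} °C -> {fan_duty(temp):.1f}% duty")
```

"Aggressive" here just means the duty climbs early and hits 100% well before the hardware is in any real danger, which costs nothing when nobody is around to hear it.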

You may wonder why I am using an LSI HBA instead of the onboard SATA controller. The reason is that the drives I have on hand are SAS and will not work with a SATA controller. Generally the onboard controllers are not good anyway, and it's recommended that you use a better storage controller.

Here is a shot of it in the rack. The rack is not deep enough for the rails, so I just set it on top of the UPS.

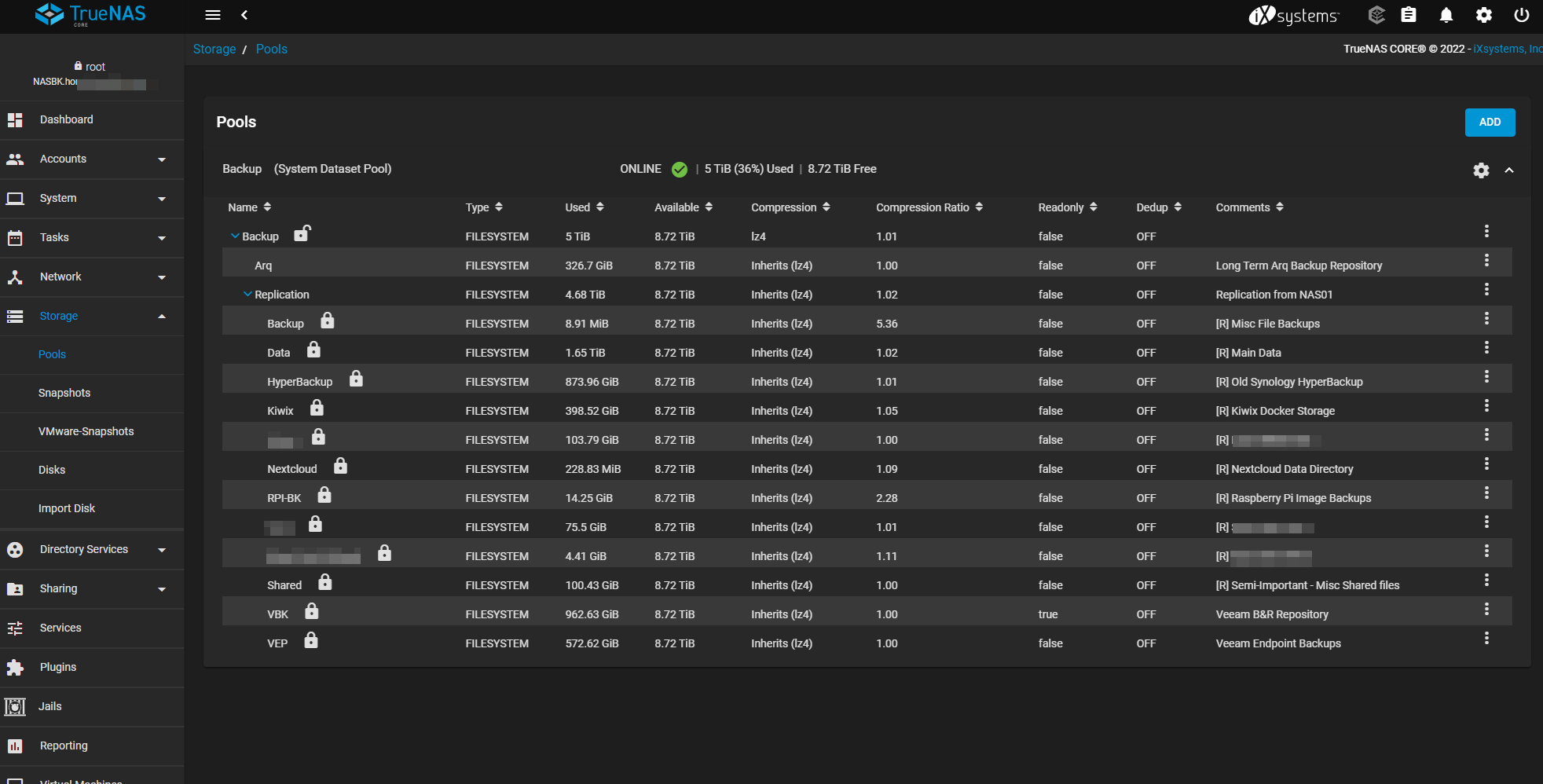

For the pool setup, I decided on RAIDZ2, as it means I can lose any 2 drives. I did think about striped mirrors, but if I have bad luck and the second drive in the same mirror goes, I lose the array. Performance would be better, but performance is not a concern here.
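To put a number on that bad luck, here is a small Python sketch that walks through every possible pair of failed drives in a 4-disk pool. The drive names and the way the mirror pairs are grouped are placeholders for illustration, and the survival rules are simplified to "RAIDZ2 tolerates up to 2 failures" and "a striped mirror dies if both halves of any one mirror die".

```python
from itertools import combinations

# Placeholder names for the four 8TB disks.
DRIVES = ["d0", "d1", "d2", "d3"]

# Assumed layout for the striped-mirror alternative: two 2-way mirrors.
MIRROR_PAIRS = [{"d0", "d1"}, {"d2", "d3"}]


def raidz2_survives(failed):
    """RAIDZ2 keeps the pool alive with up to two failed drives."""
    return len(failed) <= 2


def striped_mirror_survives(failed):
    """The pool is lost if every drive in any single mirror has failed."""
    return not any(pair <= failed for pair in MIRROR_PAIRS)


# Every way two of the four drives can fail together.
two_drive_failures = [set(c) for c in combinations(DRIVES, 2)]

raidz2_ok = sum(raidz2_survives(f) for f in two_drive_failures)
mirror_ok = sum(striped_mirror_survives(f) for f in two_drive_failures)

print(f"RAIDZ2 survives {raidz2_ok} of {len(two_drive_failures)} double failures")
print(f"Striped mirrors survive {mirror_ok} of {len(two_drive_failures)} double failures")
```

With 4 drives, both layouts give roughly the same usable space (two drives' worth), so the trade is purely speed versus surviving the two unlucky combinations.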

I got everything set up, and so far it's been working great. Zero problems, and performance is fine.

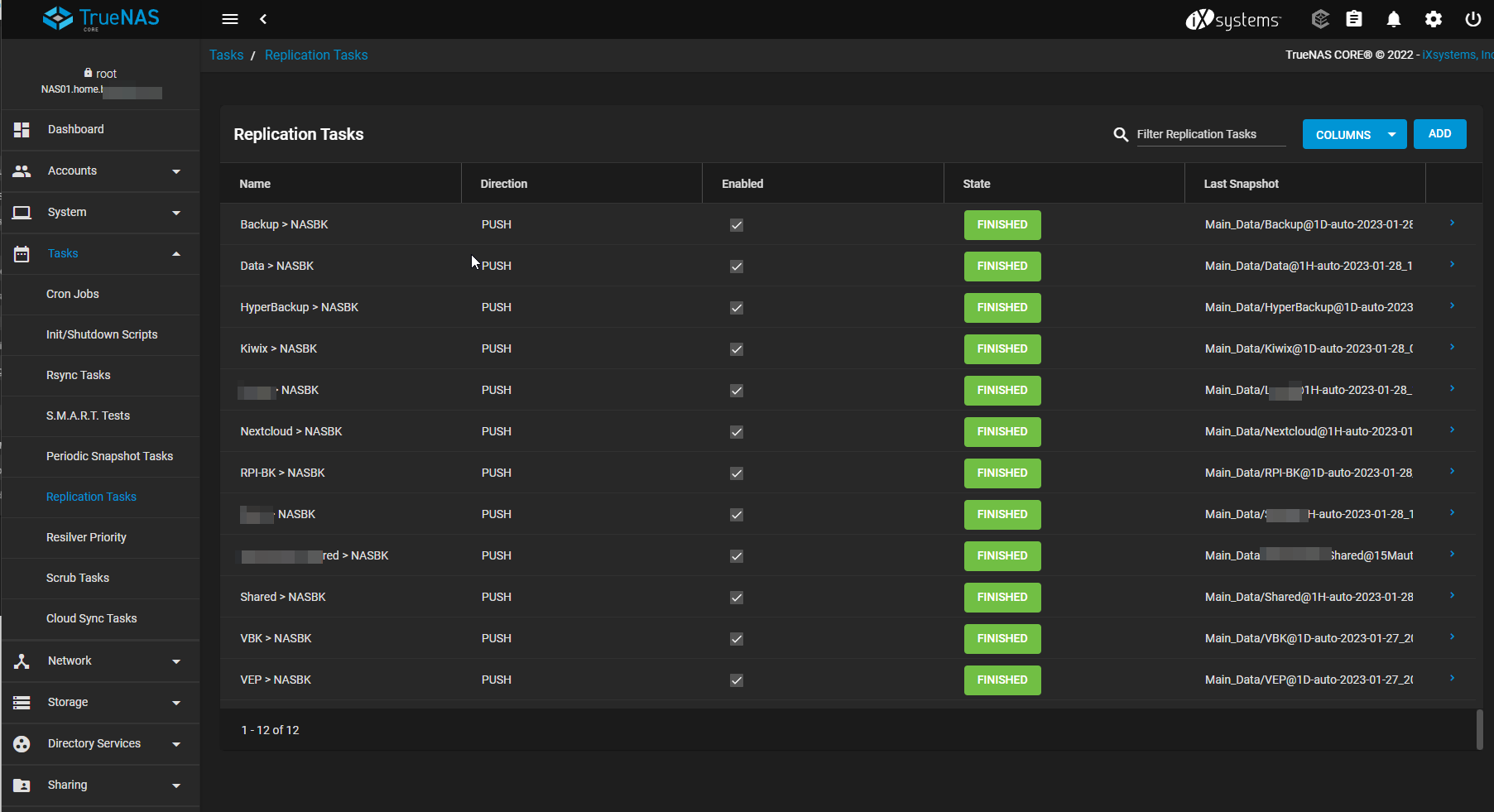

Here you can see all my replicated data.

Because this data is replicated, it does not matter if all the drives die. I can just replace them, and re-replicate all the snapshots, and I will be made whole again.

I'm doing replication every 15 mins from my main NAS. Here is the replicated list on the main NAS.

Replicating data to a second NAS has 2 benefits. The first is that it turns snapshots into backups with not much work. Now my main NAS can completely fail, and I retain all historical changes. The second benefit is that if your main NAS goes down, you can just create an SMB share or whatever you need on the second NAS and get access to your data.

Because of this, pretty much anything can happen to my main NAS, and at most I'll be 15 mins behind. If you really wanted to, you could make the replication more frequent.

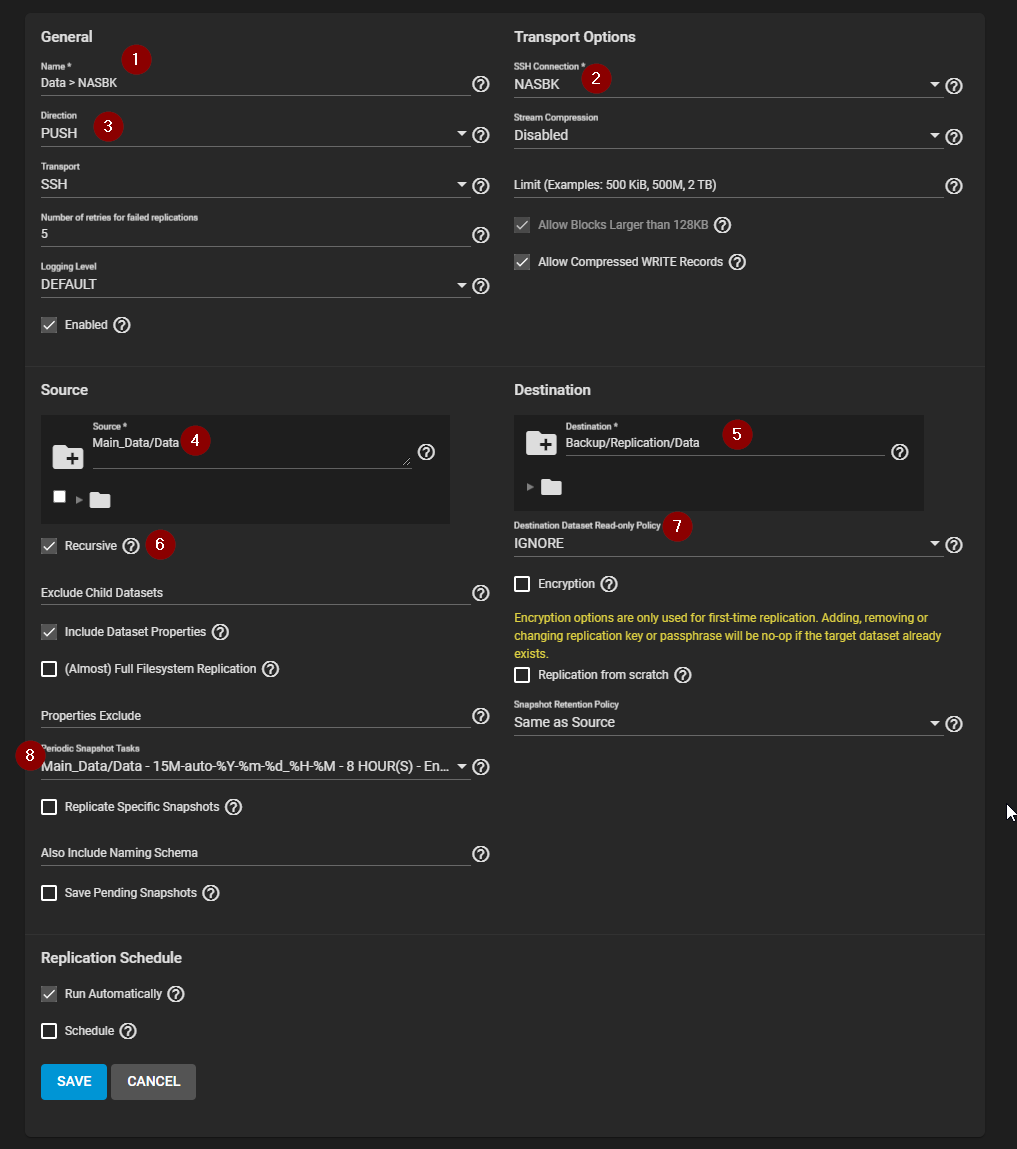

Here is my replication config if you want to steal it. I've put numbers next to everything I've changed.

If you don't select recursive, for some reason the snapshots on the backup side don't expire as expected, the way they do on the source. And the read-only policy is set so that, in the event of a main NAS failure, I can use the data on the remote side.
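TrueNAS handles all of this through the replication task in the UI, but underneath, each run boils down to an incremental ZFS send of whatever snapshots were taken since the last run. Here is a rough Python sketch that only builds the equivalent command line as a string and does not run anything. The dataset names, snapshot names, and hostname are all hypothetical, `incremental_replication_cmd` is a helper I made up for this post, and the exact flags TrueNAS passes internally may differ from what is shown.

```python
import shlex


def incremental_replication_cmd(dataset, prev_snap, new_snap,
                                remote_host, remote_dataset):
    """Build (but do not run) a shell pipeline for one incremental run.

    -R sends the dataset recursively, including child datasets.
    -i sends only the changes between the two snapshots.
    -o readonly=on marks the received copy read-only on the backup side.
    """
    send = ["zfs", "send", "-R", "-i",
            f"{dataset}@{prev_snap}", f"{dataset}@{new_snap}"]
    recv = ["ssh", remote_host,
            "zfs", "recv", "-F", "-o", "readonly=on", remote_dataset]
    return " | ".join(shlex.join(part) for part in (send, recv))


if __name__ == "__main__":
    # Hypothetical names, purely for illustration.
    print(incremental_replication_cmd(
        dataset="tank/data",
        prev_snap="auto-0900",
        new_snap="auto-0915",
        remote_host="garage-nas",
        remote_dataset="backup/data",
    ))
```

Because only the difference between two snapshots goes over the wire, running this every 15 minutes is cheap unless a lot of data changed in that window.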

That's about it! Soon I will post about the changes that led me to deploy this server, and I will also post an update in the future with any observations.