My Backup Plan & Lessons learned

I've posted about my backup plan before, but a lot has changed, and I have learned a lot about what works and what doesn't work for me. Skip to the end of the post if you just want to see my backup plan.

Lessons learned

Over the past 5 or 6 years I have really refined my backup plan, and I have identified multiple problems with it. In no particular order, here are the negatives.

Being too cheap:

The main problem with my setup is that despite spending thousands of dollars per year on IT products, I am hesitant to actually spend money on software or services. This is really stupid, as not doing so means your backups simply don't work, or you spend way too much time troubleshooting them. This isn't too bad when you don't have much to do, but I found after buying a house I am very, very busy. This means when backups fail, it might be a week before I even get to look at them, which is clearly a problem. Here is where I didn't spend money and how it hurt me. These might actually fit other categories for you, but for me it was because I was too cheap.

Storage not fit for purpose

I already had an Office 365 subscription, so I had a ton of OneDrive for Business storage, and a ton of SharePoint cloud storage. Because I was too cheap to spend the $3 or $4 per month for real storage (such as B2, Wasabi, S3, Azure) I opted to try and use the Office 365 storage for backups using programs like StableBit CloudDrive and Arq. This worked fine at first, but then I would run into a lot of problems, which were the result of the hundreds of thousands of files the applications made, and of using the API to access the storage in a way it really wasn't meant to be used. This meant that over the years I had to completely delete my backup set and start over. I couldn't contact Microsoft for support as this was a completely unsupported way to use the storage.

Since realizing this issue I have moved to a combination of Backblaze B2 and Wasabi, and I have not had a single problem.

Software Piracy

We've all been there, or at least I think we have. You see some software for $39.99, but you can also find a crack for it online... awesome. Save the $40 and get it for free. I've done this a few times for software, and each and every time it has come back to bite me, in the sense that my backups are now useless once the application finally realizes it's being pirated. Your only options are to either purchase the software, or completely move off the platform. This is a giant waste of time, and you get NO SUPPORT! In hindsight, I should have just paid for the software I needed instead of pirating it, or pirating alternative software, or substituting free software that didn't do the job I needed.

Updating software too soon:

I have been bitten by this so many times, yet I keep doing it. Because I am interested in technology, when the new software comes out I will update it with no testing, and no real thought. This has caught me out in multiple ways

OS Upgrades

Multiple times I have upgraded my OS, whether it be Windows Server, Debian or VMware ESXi. I've updated to Windows Server 2019 only to find that Veeam or my other backup software does not support it, resulting in a giant mess of downgrading (Why didn't I just read the Veeam support notes?). I've updated ESXi only to find that Veeam didn't support that, resulting in having no VM backups until Veeam released an update, and I have updated Debian only to find that the tools I was using are now not supported.

All of these could have been avoided if I had just waited for the OS to mature, or just read the damn notes!

Incomplete or buggy Software

This was a real problem for me just recently. I have been using Arq 5 for as long as I can remember, and it's worked great. When Arq 6 got announced I bought the upgrade right away on launch and upgraded my system. After all, it said it was compatible. Well, it wasn't. It completely wrecked my 4TB cloud backup set and was plagued with problems. (To the point many people jumped ship!) Eventually they completely removed the backwards compatibility with Arq 5 and had to scramble to fix hundreds of bugs. It was clear this software was completely untested, yet I upgraded without a thought. In the end I downgraded back to Arq 5 and had to upload another 4TB of data.

Over complicated setups:

I have been guilty of this too. I want a specific design, and I will refuse to believe it's simply not possible or not a good idea, so I will shoehorn 5 different tools and applications together to make my solution, only for it to flat out not work, or come crashing down later on, or worse, fail when I need to restore data.

Unsupported solutions and configurations

At one point I was using StableBit DrivePool together with StableBit CloudDrive to form a storage pool backed by OneDrive and SharePoint, and using Robocopy to move the data. When I had a problem with this, who do I turn to? I troubleshoot Robocopy only to find it's doing exactly what it should, I contact Microsoft who just laughs at what I am trying to do, and I contact StableBit who confirms their software is working as intended. The solution I had made was completely unsupported, not only for getting help, but just for working at all. Weeks were sunk into this, only for it to be a waste of time in the end.

Too many moving pieces

Not only was this an unsupported use case for many of the tools I was using, but it was just relying on too many moving pieces. Any one of those fails and the whole thing comes crashing down. Stick to a basic, supported solution or configuration and you will do much better. Even if it doesn't fit your needs, you should just move on to a different solution or accept that your needs are unrealistic and cannot be met.

Too many changes, too quick

In some of these more complicated setups I would make changes almost daily to try and improve the setup or fix little problems. This meant I never really knew the long-term reliability of the solution, and the backups were being re-uploaded all too often, which meant that historic data was being lost. The most basic backup solution I have set up is also the first one, Synology HyperBackup. I have since grown to love this tool as it's very simple, and it just works. It's been chugging along in the background for years, and I can still restore a file from my very first backup. Keeping things simple has again come out ahead.

Relying on unsustainable tools or services:

This has caught me out more times than all of the other problems combined. I will set something up which works great, but it's just a ticking time bomb.

"Unlimited" storage

So far I have been caught out by this three times, and I can say I will never be caught out again. If a deal is too good to be true, it probably is. If any company sells you "Unlimited" storage for a flat fee, it's just a scam. There is no way they can fulfill that promise, so they are lying to you.

First I used OneDrive's unlimited storage. I didn't have a sophisticated plan in place so I manually uploaded files using my slow connection. After wasting a ton of time doing this, they killed the unlimited storage...

So I moved to CrashPlan Home which was "unlimited" for $5. The initial problem is that the client would use more and more RAM the more storage you used. I personally believe this was on purpose to discourage large backup sets. I actually ended up giving CrashPlan its very own VM with 32GB of RAM just to back up my data. While this worked, it eventually was pointless anyway as CrashPlan decided that their home service was unsustainable and killed it off. This meant you had to download all your data fast (which was not possible because of the slow speed of the service), lose it, or migrate it to CrashPlan Pro. Even migrating to CrashPlan Pro, there was a limit to the backup size during the migration. This caused many people to lose their backups. The months it took for my initial backup were wasted.

But I didn't listen to myself, and I moved to CrashPlan Pro. This was double the cost at $10, so still too good to be true. It worked okay for a little while, until they announced they would stop backing up certain file types, and remove them from existing backups... uh oh. While the file types listed didn't affect me, it made me realize they were starting to feel the pain of unlimited storage again, and at any time they could add more file types and just kill your backups. So I moved off this, again wasting the time I had spent uploading all my data.

Next up I saw Amazon Cloud Drive. This was $60 a year for unlimited storage. Awesome! So I re-uploaded another 15TB of data, which on my then slow connection took forever. Once it was up there it was alright, but I did run into a few issues resulting from using the API. (See the earlier point of "Storage not fit for purpose") For a while it was going great, and because it was unlimited I just backed everything up, forever. I had over 40TB of encrypted data in my drive which I'm sure caused them some pain. Being encrypted, they can't de-dupe any of it, and there is no way you can turn a profit from selling 40TB of hot storage for $60 a year. Eventually the end came, and they bumped all the accounts down to 1TB and slowly removed your data...
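To see why that could never last, here is a quick back-of-the-envelope sketch using only the numbers above ($60 a year, 40TB stored). The function name is just something I made up for illustration.

```python
def dollars_per_tb_month(yearly_fee: float, stored_tb: float) -> float:
    """Effective price paid per terabyte per month on a flat-fee plan."""
    return yearly_fee / 12 / stored_tb


# $60 a year with 40TB stored, as in the Amazon Cloud Drive story above.
print(f"${dollars_per_tb_month(60, 40):.3f} per TB per month")
```

That works out to about 12.5 cents per terabyte per month, and the more you store, the lower it goes. No provider can sell hot storage at that rate for long.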

After this I pruned my backup set down and tried to use OneDrive again, but in the end the solution was to use a pay-as-you-go type storage provider like B2. It truly is unlimited, but you will pay more as you add data. They can never decide to just reduce the amount of storage available, as I pay for it.

I'm sure a lot of you are using G-Suite, and I can 100% guarantee the gravy train will end soon enough. They are already implementing API throttling and the 750GB per day limit, and I'm sure more is to come

NFR Software / Free Version of paid software

So far this has not yet caught me out, but it got close. I am very aware of how my backups could derail if any changes are made

I currently use Veeam Backup and Replication via their NFR key. I back up all my VMs using Veeam and I use their replication as well. Last year they changed from a socket-based licence to an instance-based licence. I was using 18 "instances" according to their new model, but they now only gave you 10...

I tried paring down my backups, but without merging systems together or doing some hackery, there was no way I could proceed. Luckily they increased the instance count on the licence to 20 which solved my issue, but I am well aware how close I came to having my VM backups halted.

This goes for any free version of paid software. Just like many other services such as Dropbox and Evernote, eventually the company will pull features from the free software to save costs or to get users to move to the paid version.

Datacenter Colocation

A lot of people, myself included, think it's very cool to have space in a rack at a datacenter. It's very secure, the power is stable and you don't need to worry about HVAC. I have a post about my datacenter colo server which you should check out if you haven't already.

In two cases using colocation has been a problem for either me or someone I knew, all because of cost. Even if you do get a good deal, I think you should keep in mind whether the deal is sustainable, and whether you would be willing to pay if the price increases. For personal use, I think a colo is almost always too expensive.

A friend got a fantastic deal on a 3 year contract for 2U of rack space for just $60/mo with a 1G symmetrical line and 35TB per month of bandwidth. He moved more and more services out there, and had all of his backups and replication going out there. Everything was great!... Until the contract ended and they bumped the price up to over 5x what he was paying before. Now he had to rush to get his hardware back from another state, which was a huge hassle, and all of his backups stopped while this was happening. He then had to find replacements for extremely sophisticated setups he took for granted.

I had a similar problem in that I had 1U of rack space for free. But it was through my employer, which comes with a similar set of problems. Eventually I found a better job opportunity, which meant that I had to rip out all of my equipment and try to replace the functions of a full ESXi setup I had in "the cloud". That's a tough task.

Expensive enterprise hardware you got for cheap

We all love a good deal on eBay, but if you are going to use whatever you bought for backups that need to run no matter what, make sure you can actually support that equipment and fix it if it breaks. Recently I got a killer deal on a tape library. I paid around $200 for over $4000 worth of equipment, awesome!

I knew this was unsustainable hardware so I never used it for anything critical, and just backed up media with it. Well, a few weeks ago one of my tape drives started to have problems. If I was using this for my important backups and only had 1 drive, I would be forced to spend around $2000 on a replacement tape drive (which, let's face it, I won't do). Make sure the equipment you are using can stay in service for a reasonable amount of time even if it breaks. You don't want to be switching backup solutions every few months, or you lose your historic data without doing a lot of extra work.

The other thing to think about with tapes, is what happens if someone steals your tape library along with the rest of your lab? Well now you need that tape drive to get your data back! Good luck.

Unreliable physical storage locations

I never thought this would be an issue, but part of my off-site/off-line backups was keeping a storage drive in my desk at work. This was great, and how can it fail? Well, now with COVID-19 I work from home...

Now my off-site backups are on-site. Luckily I have other off-site backups, but if this was my only one, all my data would now be on-site, instead of off-site!

To fix this I am looking into making secure storage elsewhere on my property, or using something like a safety deposit box or storage unit that I should be able to access no matter what

Not enough resources (Money & Internet)

By resources I don't mean hardware, because even guys with no spare income manage to acquire a full rack of servers and a 100TB NAS at home, somehow.

The issue comes when you now want to back up a large amount of data, but just don't have the resources. Backing up 30TB of data is expensive. You can't just expect to back up all that while complaining about spending $10 a month. Sure, you can probably find a home for the data, but I'd bet a million dollars it's not going to last. I think people need to be realistic when sizing their setups in the first place, and really think about whether they can sustain what they are trying to do.

The other side of the coin is internet. You might have all the money in the world, but if your pipe at home is too small, you are not going to be able to do cloud backups. I had 15TB of data I wanted to backup with a 35Mb/s upload speed. I thought it would be easy, but it wasn't. The initial backup takes too long, and all too often did I realize I had to run the backups literally all night to keep up. This means any extra data I never accounted for would run the backups into the day, and eventually just cause me to have my upload maxed out 24/7. If you have a large data set and a low upload speed, you may want to just trim down what you send to the cloud and find another solution
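To put rough numbers on that, here is a small sketch. It assumes decimal units (1TB = 10^12 bytes, 1Mb/s = 10^6 bits per second) and that the full upload speed is available the whole time with no protocol overhead, so real uploads will be slower. The function name and the 8-hours-a-night window are my own illustration.

```python
def upload_days(data_tb: float, upload_mbps: float, hours_per_day: float = 24.0) -> float:
    """Days needed to upload data_tb terabytes at upload_mbps megabits per second."""
    bits = data_tb * 1e12 * 8                 # terabytes -> bits
    seconds = bits / (upload_mbps * 1e6)      # bits / (bits per second)
    return seconds / (hours_per_day * 3600)   # only count the hours you upload each day


print(f"{upload_days(15, 35):.1f} days uploading around the clock")
print(f"{upload_days(15, 35, hours_per_day=8):.1f} days uploading 8 hours a night")
```

For 15TB at 35Mb/s that is roughly 40 days with the connection maxed out 24/7, or around four months if the backup only runs overnight, and that is before any new data gets added.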

The general takeaway with this point is that you really will have to spend money, and have the right resources available, to back up large amounts of data. I think in general a lot of people are trying to back up too much data. Perhaps think about safely storing the 1-2TB of important files and ignoring the 20TB of movies. Once you lump that 20TB of movies into the backup set, it makes those important files a lot worse off from a backup standpoint, as you end up using worse and worse solutions to get that 20TB up there.

Other general bad ideas:

Here are a few more points I couldn't fit into the above topics

Not enough hardware diversity

With virtualization it's super easy to merge all of your servers into a single server. It uses less power, it's cheaper, it makes for better networking. But then you see people having a backup VM on the same host as their main data. Wait a second...

What happens when your host gets taken out by a power surge? Now your backups are gone too! Even if these are just local backups which wouldn't protect against theft or fire, there are plenty of things that can take down both your backups and your main data in one go. I've even seen people store their backups and production data on the same storage array. Crazy! You are 2 drive failures away from losing everything.

Taking other people's opinions as fact

I've done this all too often. Someone who is clearly an expert on the topic tells you a solution is great, or that what you are using is trash.

I've had this multiple times with Synology HyperBackup. People will say it's horrible and unreliable, and they are seemingly experts in the IT space. So far my experience has been the exact opposite. Of all the tools I have used, it's the one that has lasted the longest and just keeps working.

I've also had people tell me certain tools are near perfect, such as Duplicati, but when I ran it I had nothing but problems. So it's worth noting that your experience may drastically differ from everyone else's. If you make a post and 10 people respond telling you to use some software, there is still a pretty good chance the software might be terrible, or just not work for you. Like with Duplicati, I'm not saying it's bad software, but it just never worked for me. I wasn't able to use it long enough to even form a real opinion. Reddit is a huge echo chamber most of the time, so whatever is the latest trend often gets hailed as the best ever solution, and the more basic tried and true options seem to get ignored.

Not testing backups

This one is pretty basic, but I think a lot of people skip it even when they know they should be doing it. I've done it too, and just assumed everything was working great. I did this when I was using a basic Robocopy setup with StableBit CloudDrive and OneDrive. I went to restore a 1GB file that I had genuinely lost, only to get a ton of read errors, and after a full night of waiting for the file to download, it failed every time. Luckily I had another backup, but a solution that on paper should have worked, didn't.
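One way to make restore testing routine is to restore a sample folder to a scratch location now and then and compare it against the live copy. Below is a minimal sketch of that idea, assuming both trees are reachable as local paths. The function names are hypothetical and not part of any backup tool mentioned here.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files don't have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_restore(source_dir: Path, restored_dir: Path) -> list[str]:
    """Return a list of files that are missing or different in the restored copy."""
    problems = []
    for source_file in sorted(p for p in source_dir.rglob("*") if p.is_file()):
        relative = source_file.relative_to(source_dir)
        restored_file = restored_dir / relative
        if not restored_file.is_file():
            problems.append(f"missing: {relative.as_posix()}")
        elif sha256_of(source_file) != sha256_of(restored_file):
            problems.append(f"differs: {relative.as_posix()}")
    return problems
```

An empty list from `verify_restore(Path("photos"), Path("restore-test/photos"))` means every file came back byte for byte. Files that legitimately changed since the backup ran will show up as differences, so this works best on data that rarely changes.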

Not thinking long-term

I never even thought about a situation where I need to restore a family photo 10 years down the line, but it might happen. At some point I realized that if a photo gets corrupted, it would be many years until I saw the problem, and my backups would be useless as that version will have been rotated out years ago

If you are storing very valuable data, consider what will happen if you don't immediately notice data loss or corruption. Every single time I have restored a file it has been because the file was messed up in some way, or because I couldn't find it even though I was so sure I knew where it was 8 months ago.

I feel like we all focus too much on total system failure, when that's actually a pretty rare occurrence with today's great hardware. For this reason I started doing a yearly picture backup to M-DISC for long-term retention. I have another post on that.

Not having good data storage for the original copy

Lots of people will spend endless time perfecting their backup plan, only for their main data to be stored in a horrible way prone to data loss or corruption. Perhaps spend a little more time getting your main storage to be reliable so you never have to restore from backups. My Synology NAS is set up in a way that it would be very hard to actually end up with corrupted or lost data, and because of that it's very rare I need my backups, if at all.

Your goal shouldn't be to easily restore from backups, it should really be to never lose the data in the first place.

Not realizing what a backup is

RAID is not a backup, as if you delete a file, it's gone. Snapshots are not backups, as if the array fails, the data is gone. Data synchronization isn't a backup, as the lost data is then deleted on the other end.
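The difference between sync and backup is easy to show with a toy model. The sketch below uses plain dictionaries as stand-ins for folders. It is purely illustrative and does not model any particular sync or backup product.

```python
def sync(source: dict[str, str], mirror: dict[str, str]) -> None:
    """One-way mirror: the destination ends up identical to the source."""
    mirror.clear()
    mirror.update(source)


def backup(source: dict[str, str], versions: list[dict[str, str]]) -> None:
    """Versioned backup: add a point-in-time copy and leave older ones alone."""
    versions.append(dict(source))


files = {"taxes.pdf": "2019 return", "photo.jpg": "family"}
mirror: dict[str, str] = {}
versions: list[dict[str, str]] = []

sync(files, mirror)
backup(files, versions)

del files["photo.jpg"]  # accidental deletion on the source

sync(files, mirror)      # the deletion is faithfully copied to the mirror
backup(files, versions)  # the new version lacks the file, the old one keeps it

print("photo.jpg" in mirror)       # False
print("photo.jpg" in versions[0])  # True
```

The mirror faithfully repeats the mistake, while the first backup version still holds the photo.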

If a tool you are using for backup has "Sync" in the name, think long and hard about how well it will work. Most likely it has not been developed for backup, and the features will be sub-par, or just not work. A lot of people use Synology CloudSync to get data to B2 and then use retention settings in B2 to keep deleted data. I tried this, and it was one of the worst experiences I had. There was no UI designed for restoring deleted data, and CloudSync had no idea when I restored a file in B2. Worse, there were no real backup retention and thinning options to speak of. If you are relying on something like this, I would personally move off and use another solution.

In the same way, storing your data in the cloud isn't a backup in itself. You can very easily suffer from data loss in the cloud, and the provider will probably tell you to pound sand. Sure, you can easily recover from a deleted file, but what about when their setup breaks and loses your data? You are finished then.

Positives

All the above has been negatives, so here are the positives. They are essentially just doing the opposite of above but there are a few that stand out

Following 3-2-1 and having multiple backups

This has saved me many times. Because I now have so many diverse backups, even if one fails it doesn't leave me without protection. Never will I be on vacation, get an alert for a failed backup and be uneasy the whole trip. I know that if any one, or even two, of my backups fail, my data is still secure.

Going Basic

As I mentioned above, the most basic tools are the ones that will last. Consider giving up some features you wanted, or wasting a little more storage than you needed, to stick with a more basic solution that will just keep on working forever. HyperBackup, once my least favorite backup tool, has become my favorite over the years, and all of the complicated tools end up failing me and being replaced.

Spending good money on storage

I reluctantly spent almost $1000 on an 8 Bay NAS with an Intel Atom in it. Performance isn't great either, but it works. It just works day after day, never has a problem and the updates are all validated, and when things go wrong, I have support. I have been so happy with my Synology NAS I would buy another with no hesitation

I don't have to rely on a toxic forum *Cough, FreeNAS* or a subreddit full of people who don't know the product very well. I call Synology who has a support engineer who can actually fix the problem

Next up is how I store my data, then what I do to keep it safe, and finally backups.

Data Storage and Protection

All of my data is stored on my Synology DS1817+ which is configured in SHR2, which lets me lose 2 drives and still have the array working, only on the third failure would I lose data. I am also running BTRFS which does check-summing of data which should protect against bit rot and other corruption.

Then, I do snapshots. I take snapshots every 15 minutes, which then get consolidated into 24 hourly, and then 2 daily snapshots. This lets me instantly recover data that has been lost or changed, and it doesn't really use much extra space at all. This also covers data that isn't backed up, so it's very nice to have. The snapshots are only accessible by an admin account on the Synology which has 2FA and restrictive firewall rules to get to the UI, so a ransomware attack could not remove these snapshots.
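For anyone curious how that kind of consolidation works in principle, here is a generic sketch of an hourly/daily thinning policy. It is not Synology's actual algorithm, just one simple way to express "keep the newest snapshot from each of the last 24 hours and each of the last 2 days". The function name and parameters are my own.

```python
from datetime import datetime, timedelta


def snapshots_to_keep(snapshots, keep_hourly=24, keep_daily=2):
    """Pick the snapshots a simple hourly/daily thinning policy would retain."""
    keep = set()
    hours_seen, days_seen = [], []
    for taken_at in sorted(snapshots, reverse=True):  # newest first
        hour_bucket = taken_at.replace(minute=0, second=0, microsecond=0)
        day_bucket = taken_at.date()
        # Newest snapshot in each of the most recent `keep_hourly` hours.
        if hour_bucket not in hours_seen and len(hours_seen) < keep_hourly:
            hours_seen.append(hour_bucket)
            keep.add(taken_at)
        # Newest snapshot in each of the most recent `keep_daily` days.
        if day_bucket not in days_seen and len(days_seen) < keep_daily:
            days_seen.append(day_bucket)
            keep.add(taken_at)
    return sorted(keep)


# Three days of 15-minute snapshots, ending at noon.
end = datetime(2020, 6, 3, 12, 0)
all_snapshots = [end - timedelta(minutes=15 * i) for i in range(4 * 24 * 3)]
kept = snapshots_to_keep(all_snapshots)
print(f"{len(all_snapshots)} snapshots taken, {len(kept)} kept")
```

Everything not in the returned list is a candidate for deletion, which is why frequent snapshots end up using so little extra space.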

I also enable the shared folder recycle bin, which empties every night. This protects against small file deletions without having to go into a backup

Past those, there is some physical protection that comes into play. The server closet is locked and has a solid wood door with EZ-Armor door jamb reinforcement. This makes it very hard to break into the room that holds my data

It's on a UPS with very high sensitivity, so any brownouts, outages or surges should be dealt with before they get to the NAS, and I also have a surge protector in my electrical panel. I've also installed a brand new ground rod, and cut the phone lines and coax into the house, which should help reduce the chance of getting hit by lightning strikes that occur elsewhere and travel down the line.

Data Backup

This is only going to cover what I am doing to back up my actual important data. This is documents and family photos mainly. I have less robust solutions in place for data that can be easily downloaded again

On Site / On Line

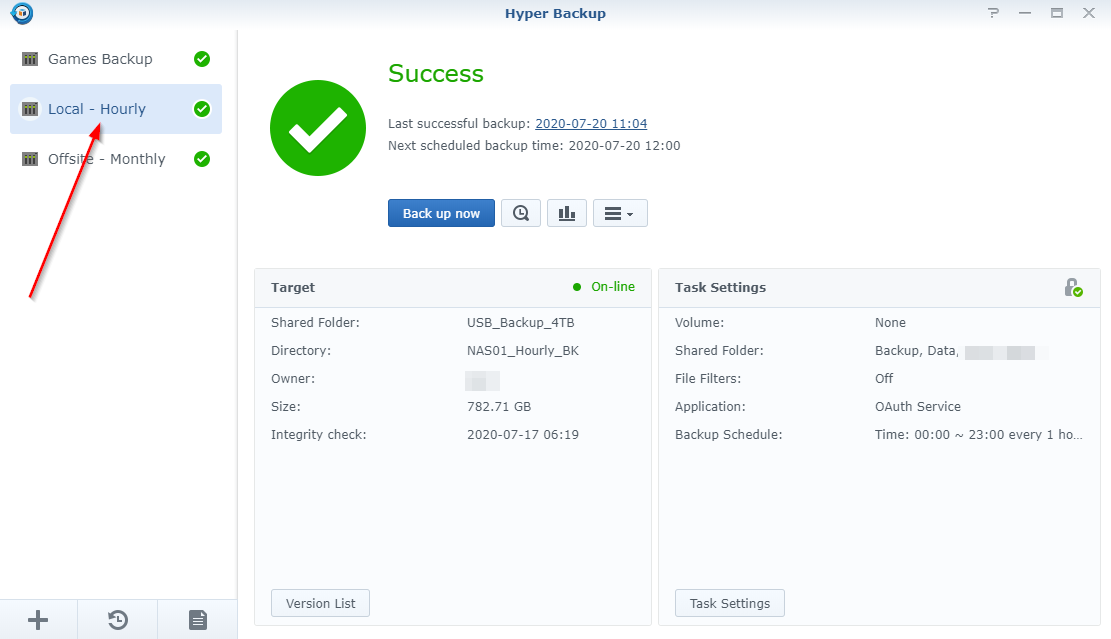

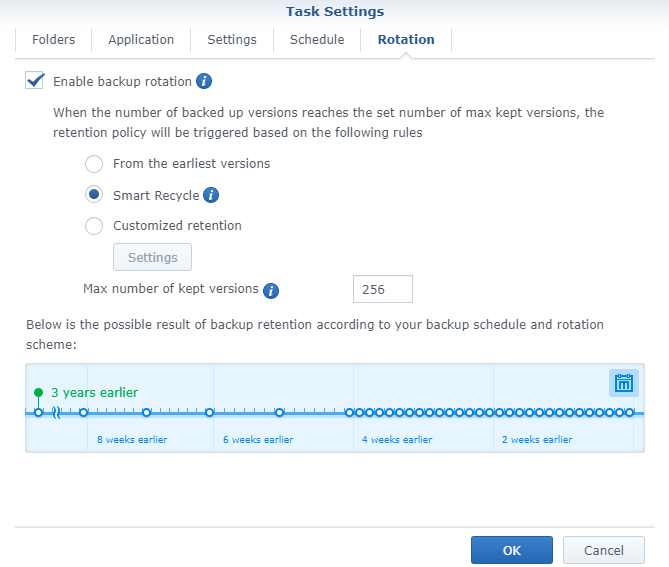

My first line of defense is a 4TB WD Easystore connected directly to my Synology NAS. I back up to it using HyperBackup. The drive has very strict permissions set, so only an admin can even get to the drive. This means any kind of ransomware or attack on the network shouldn't be able to access this drive to kill the backups. I take backups of my important data hourly, and keep them for around 3 years.

As you can see, after the 256th backup takes place, the oldest is removed. This equals out to 3 years of retention

Off Site / On Line

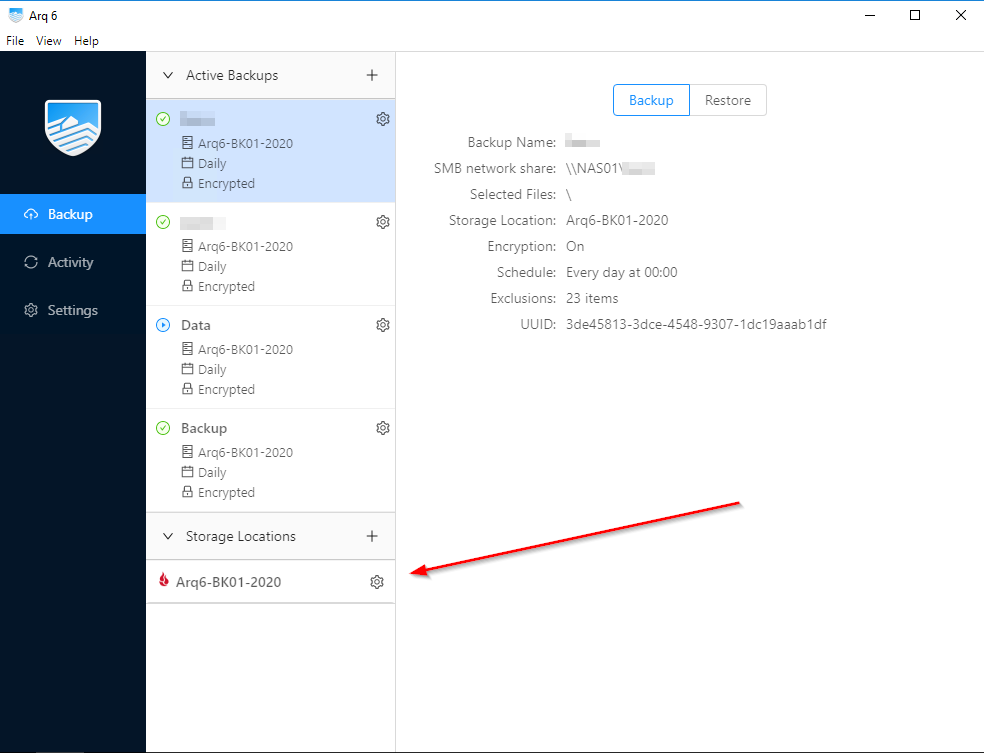

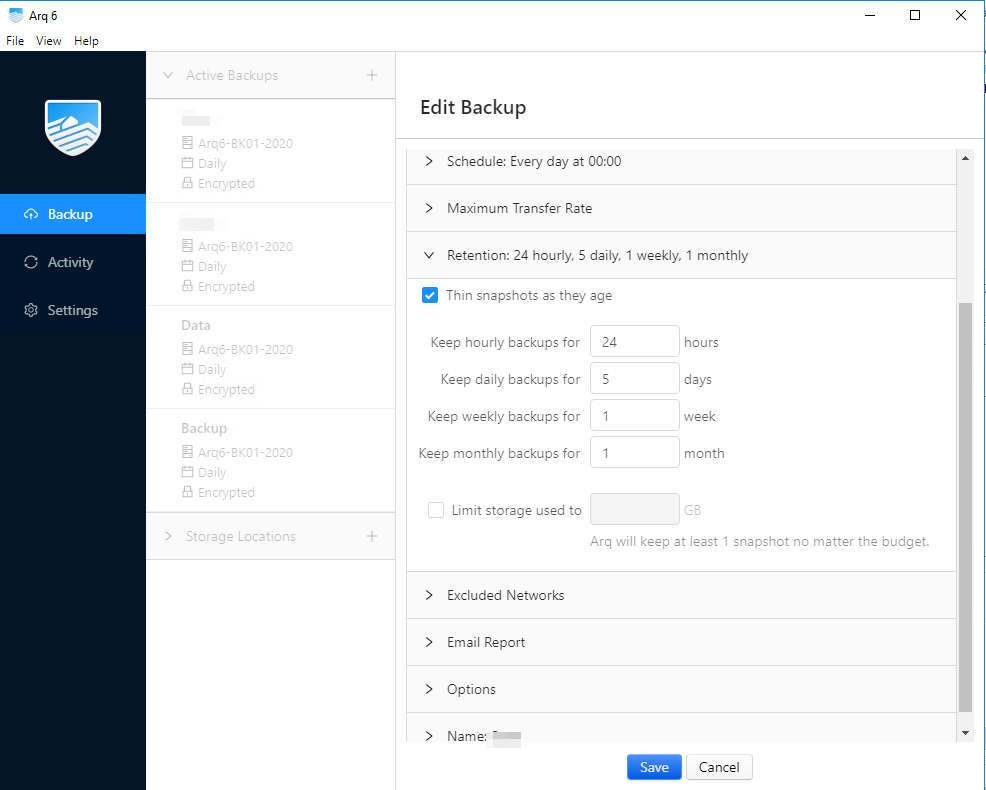

Here I am using Arq 6, which I now think is stable enough for me to use. But I do have my Arq 5 working on another box until I am 100% sure. The reason I am using another application is twofold. First, if there is an application failure, one of my backups is still going strong. Second, HyperBackup cannot run two tasks at once, so if this runs for 5 hours backing up a lot of data, I miss 5 hourly backup runs.

I back up all the same data, but this time to Backblaze B2. The data is just stored for 1 month, as this is purely in place to recover from a local failure. Any historic backups would get restored from HyperBackup.

This costs me a very small amount of money each month in storage costs from B2

Off Site / Off Line

My last resort is an off site, off line backup. This backup cannot be affected by ransomware at all, because it's completely off line.

I have a USB 3.1 Dock attached to my Synology NAS, and using that I can attach a hard drive which I keep in a protective case. Once the backups are complete, I move the drive(s) to an off-site location

I again use HyperBackup for this task, it works very well. I do have to manually kick off the task though

Long Term Archiving

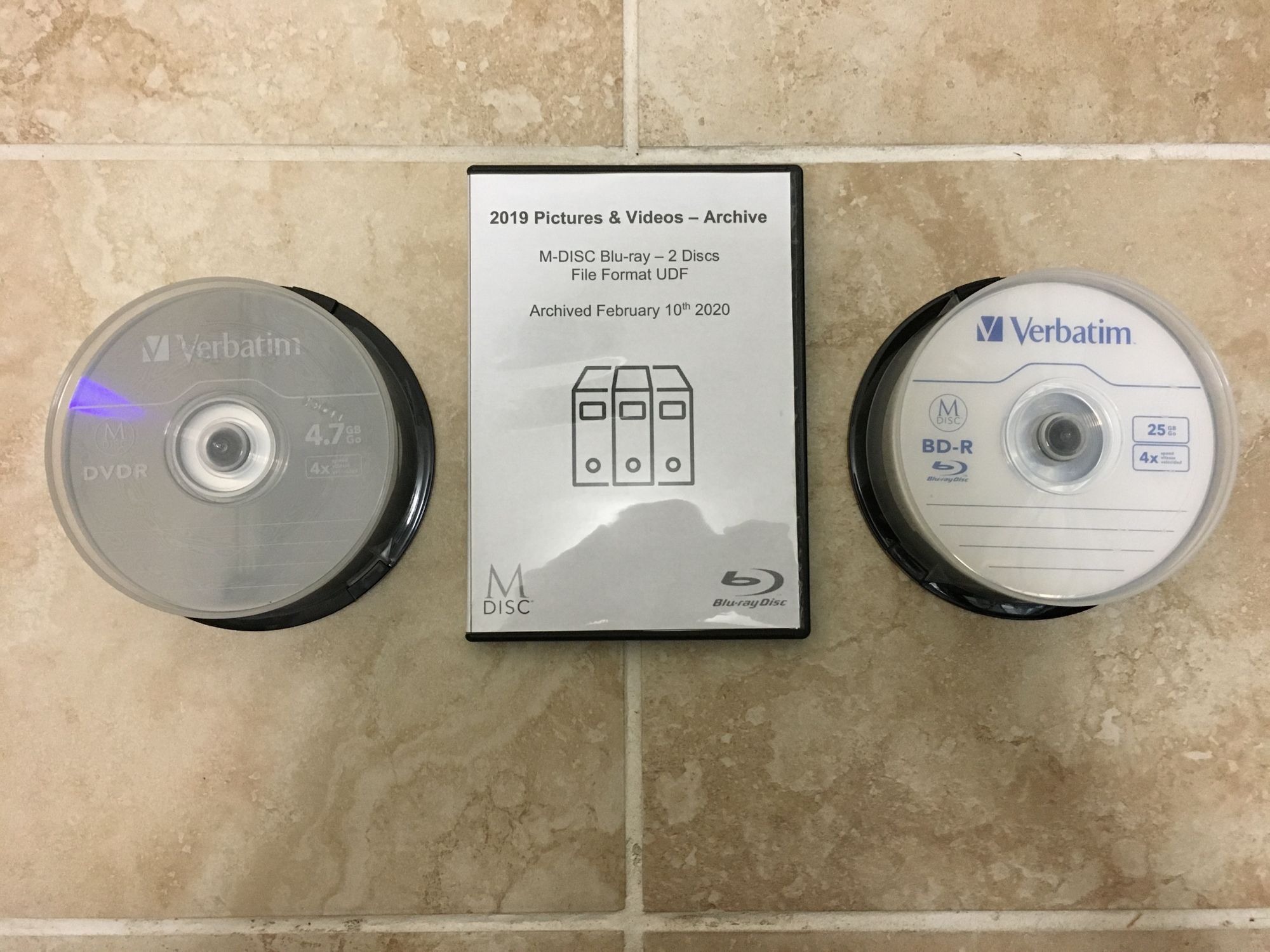

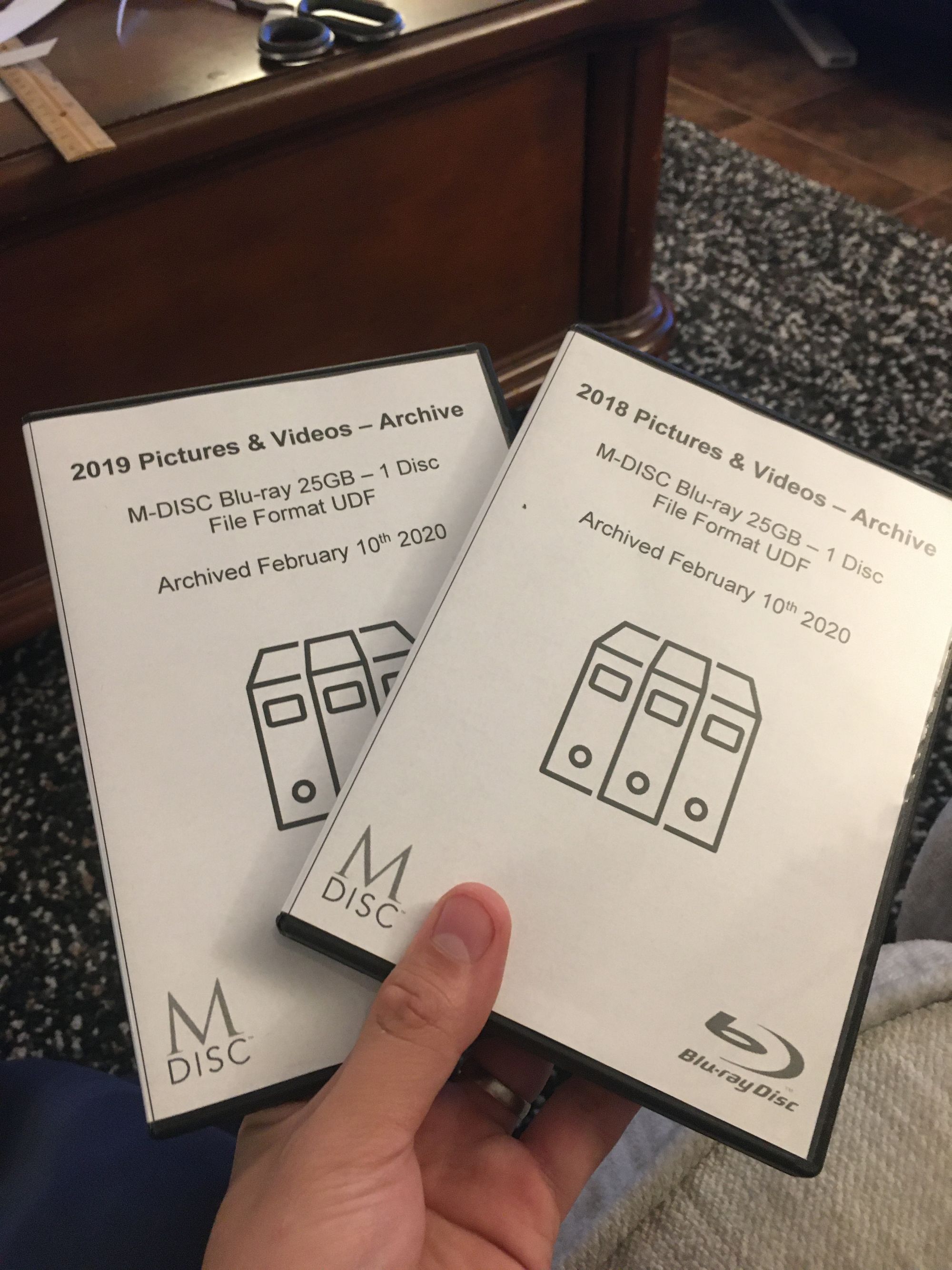

The last piece is long term archiving, and yearly backups. I keep these backups forever, and they are off line by nature. Currently these are stored in my home, but I am thinking of perhaps storing them off-site. I have articles on both of these separately, so I will be brief. If you would like more information, check out those other articles

First I back up my family pictures to M-DISC, either a Blu-ray or a DVD depending on the amount of data. M-DISCs are rated to last 1000 years.

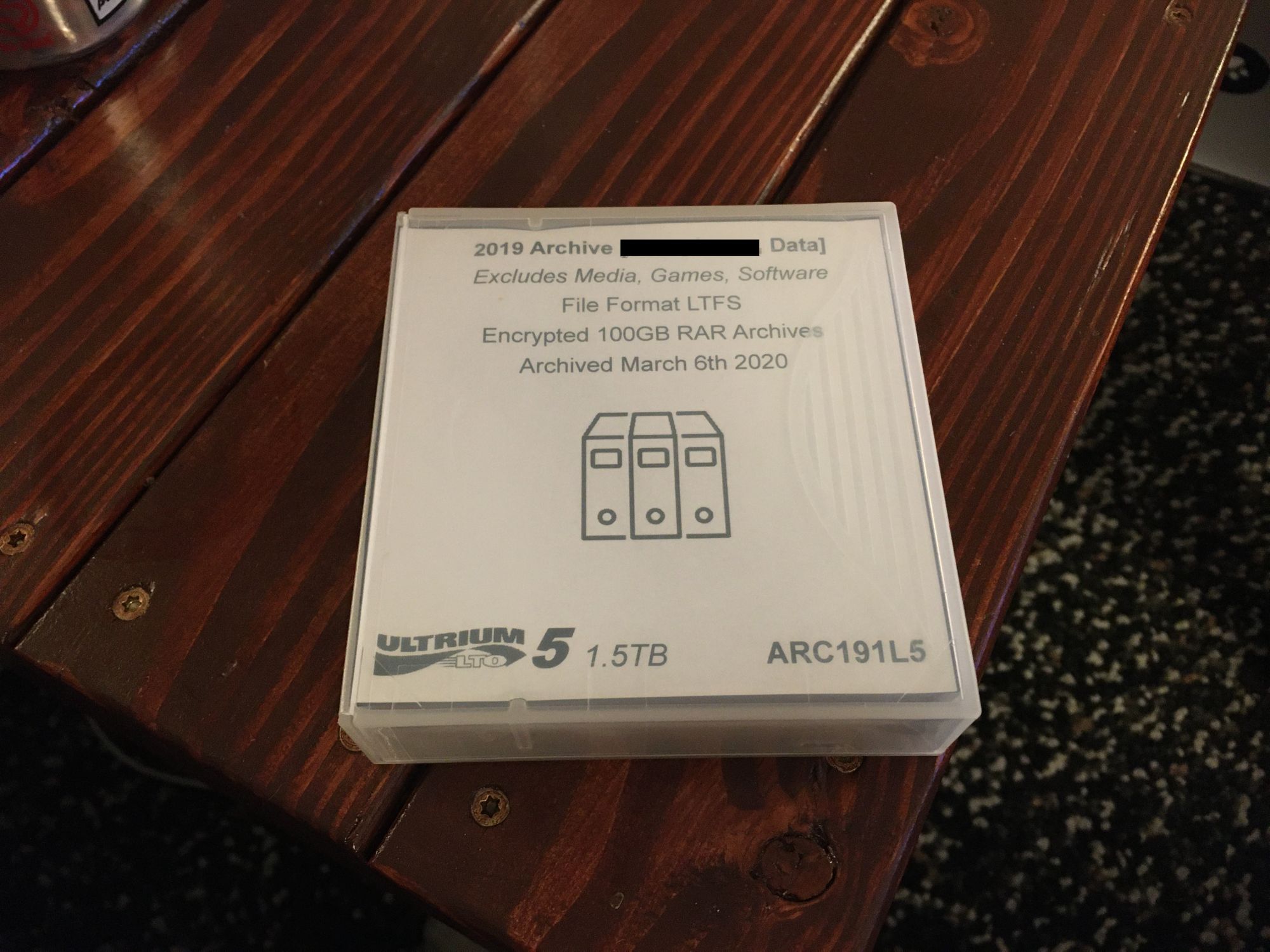

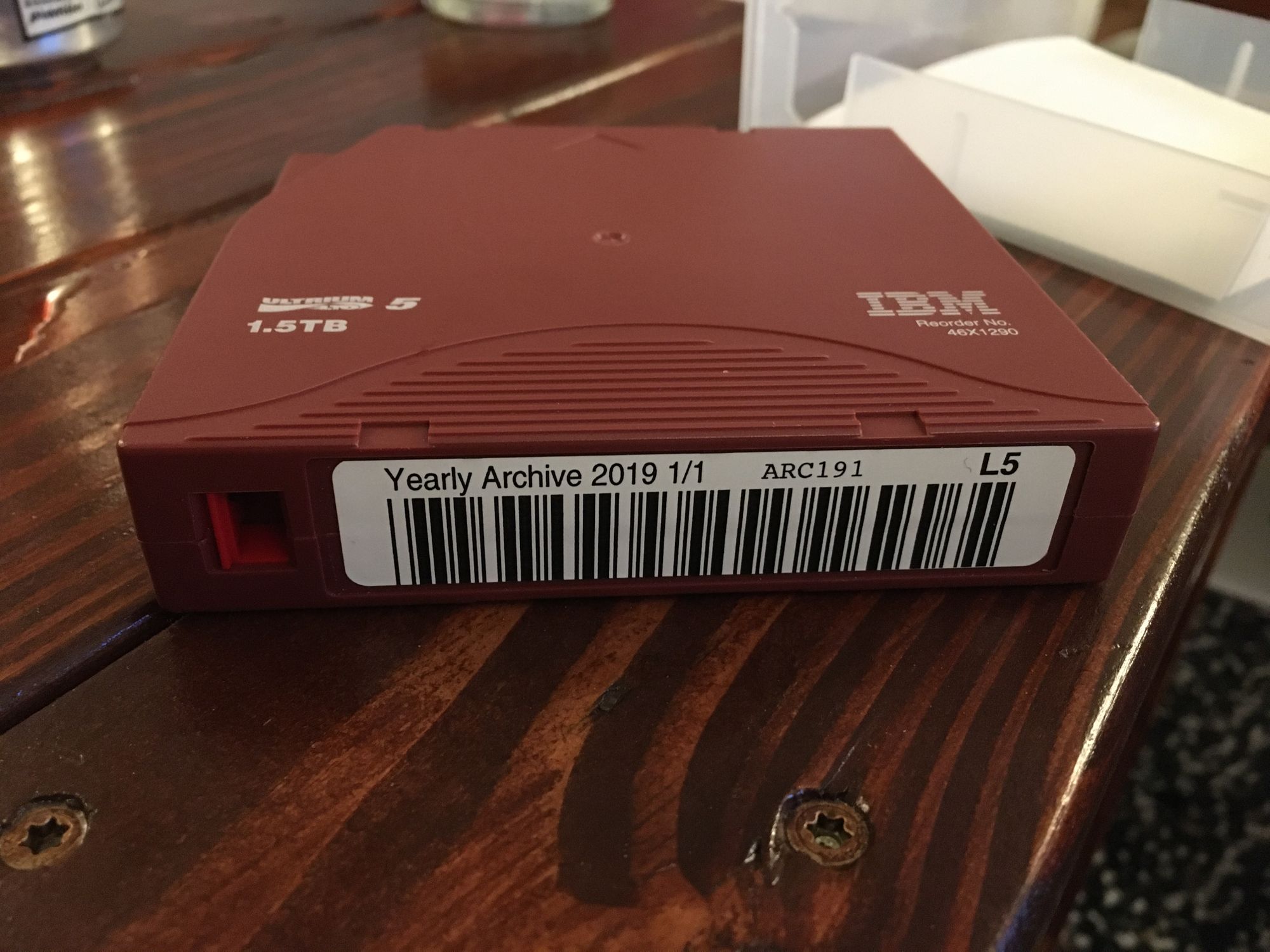

Second, I do a yearly backup to tape which includes more than just pictures

Final thoughts

If you read my older posts you will see that I had more stuff in place, with more copies of my data. Ultimately those never panned out, and what you see here is what works flawlessly, and has stuck. I am very confident that I can't lose my important data with what I have in place

Please leave any comments or contact me if you have additional questions