My Data Backup Plan - 2021

This post will detail how I am protecting my important data. The old post can be found here:

Since then, I have completely moved off my Synology NAS, which forced me to make some changes. Here are all the details.

This post covers only how I am protecting my truly important data, such as documents and photos. Bulk media storage is not included.

Data Storage and Protection

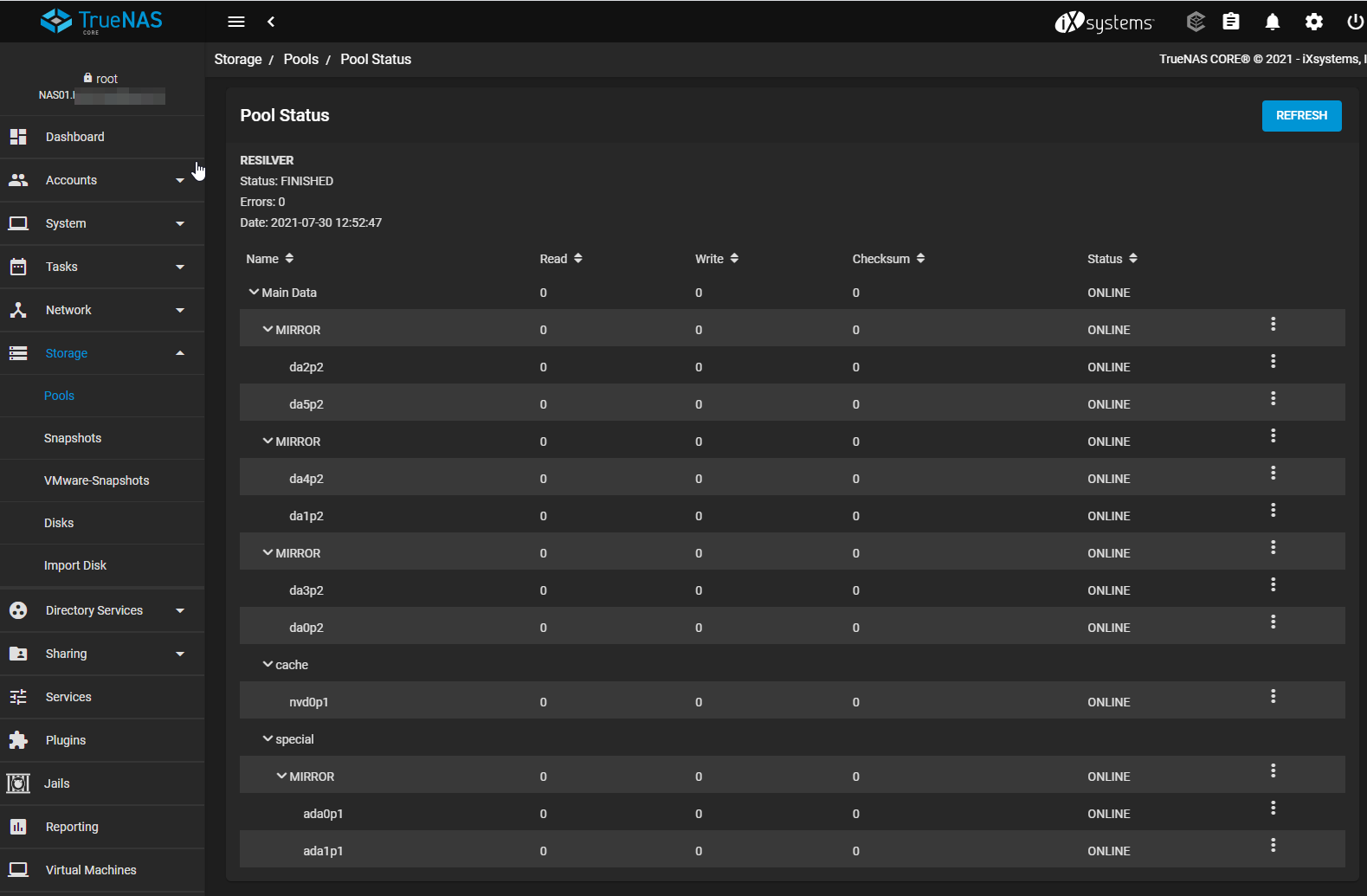

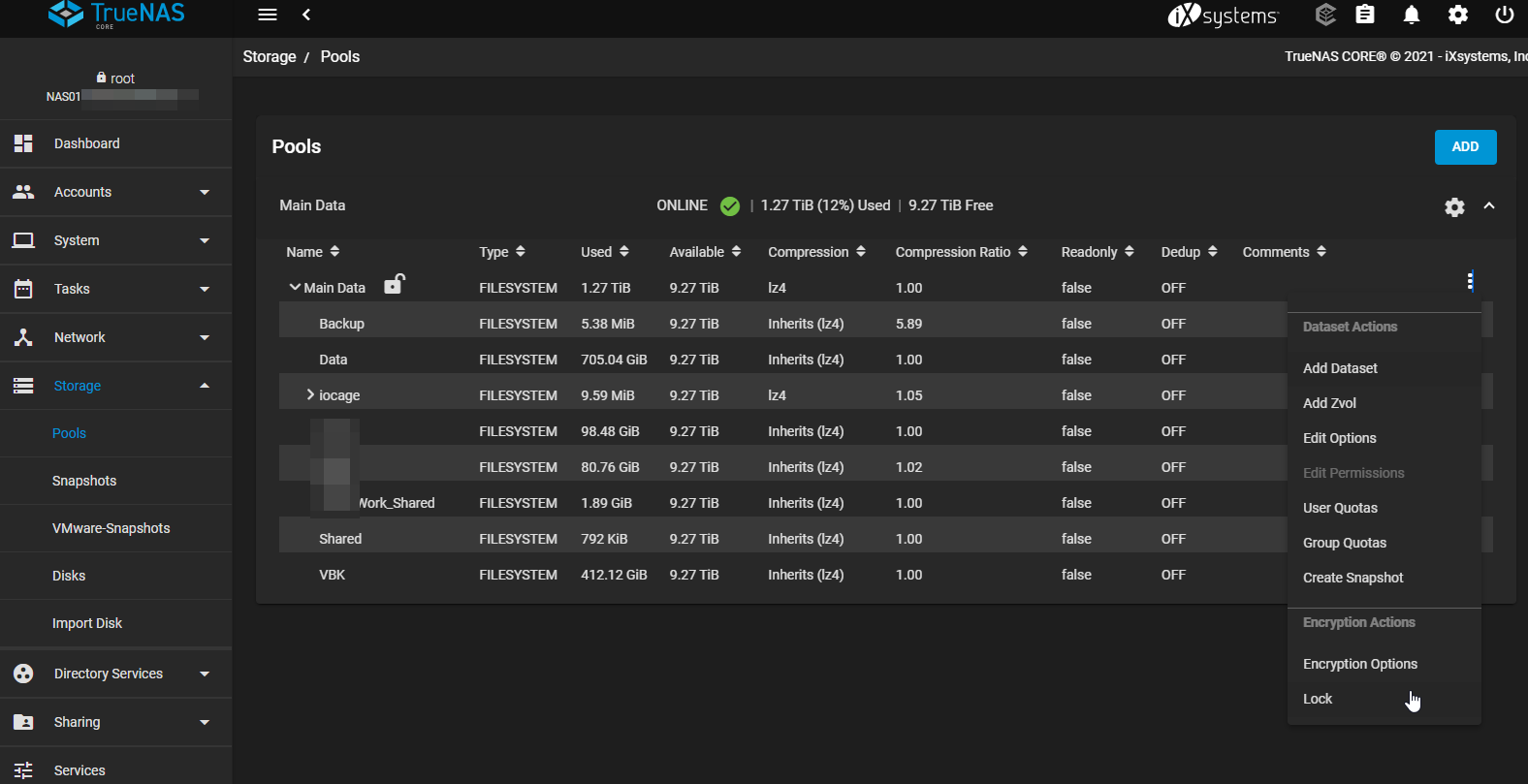

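All of my data is stored on a new TrueNAS box, which uses ZFS as the filesystem. Because ZFS checksums the data and the pool is built from mirrors, corruption from bit rot is detected and can be repaired from the healthy copy, so it's almost impossible to end up with a silently corrupted file.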

My main data array consists of 6 x 4TB hard drives (a mix of SATA and SAS) in three mirrored VDEVs, plus another mirrored VDEV for metadata made up of Intel enterprise SSDs. This means I could withstand a maximum of 4 drive failures. They would have to be in separate VDEVs, though: if I lose both drives in any one VDEV, all the data is gone. This is fine, as I have very good backups and replication. I could have gone with RAIDZ2 or RAIDZ3 for more protection, but I was looking for good performance from this array, and capacity wasn't a concern.
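To make the failure math concrete, here is a minimal Python sketch of the rule described above: the pool survives as long as every mirrored VDEV keeps at least one healthy disk. The VDEV and disk names are made up for illustration and are not the actual device names on my system.

```python
from typing import Dict, List, Set

# Hypothetical layout: three data mirrors plus one metadata mirror.
POOL: Dict[str, List[str]] = {
    "data-mirror-0": ["hdd0", "hdd1"],
    "data-mirror-1": ["hdd2", "hdd3"],
    "data-mirror-2": ["hdd4", "hdd5"],
    "meta-mirror": ["ssd0", "ssd1"],
}


def pool_survives(vdevs: Dict[str, List[str]], failed: Set[str]) -> bool:
    """Return True if every mirror VDEV still has at least one healthy disk."""
    return all(
        any(disk not in failed for disk in disks)
        for disks in vdevs.values()
    )


def max_tolerated_failures(vdevs: Dict[str, List[str]]) -> int:
    """Best case: every VDEV loses all but one of its disks."""
    return sum(len(disks) - 1 for disks in vdevs.values())
```

With this layout, losing one disk from each mirror is survivable, while losing both disks of any single mirror is not.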

This system has a dual-port SFP28 NIC, connected with 2 x 10G SFP+ connections.

The system has redundant PSUs and is backed up by a double-conversion UPS.

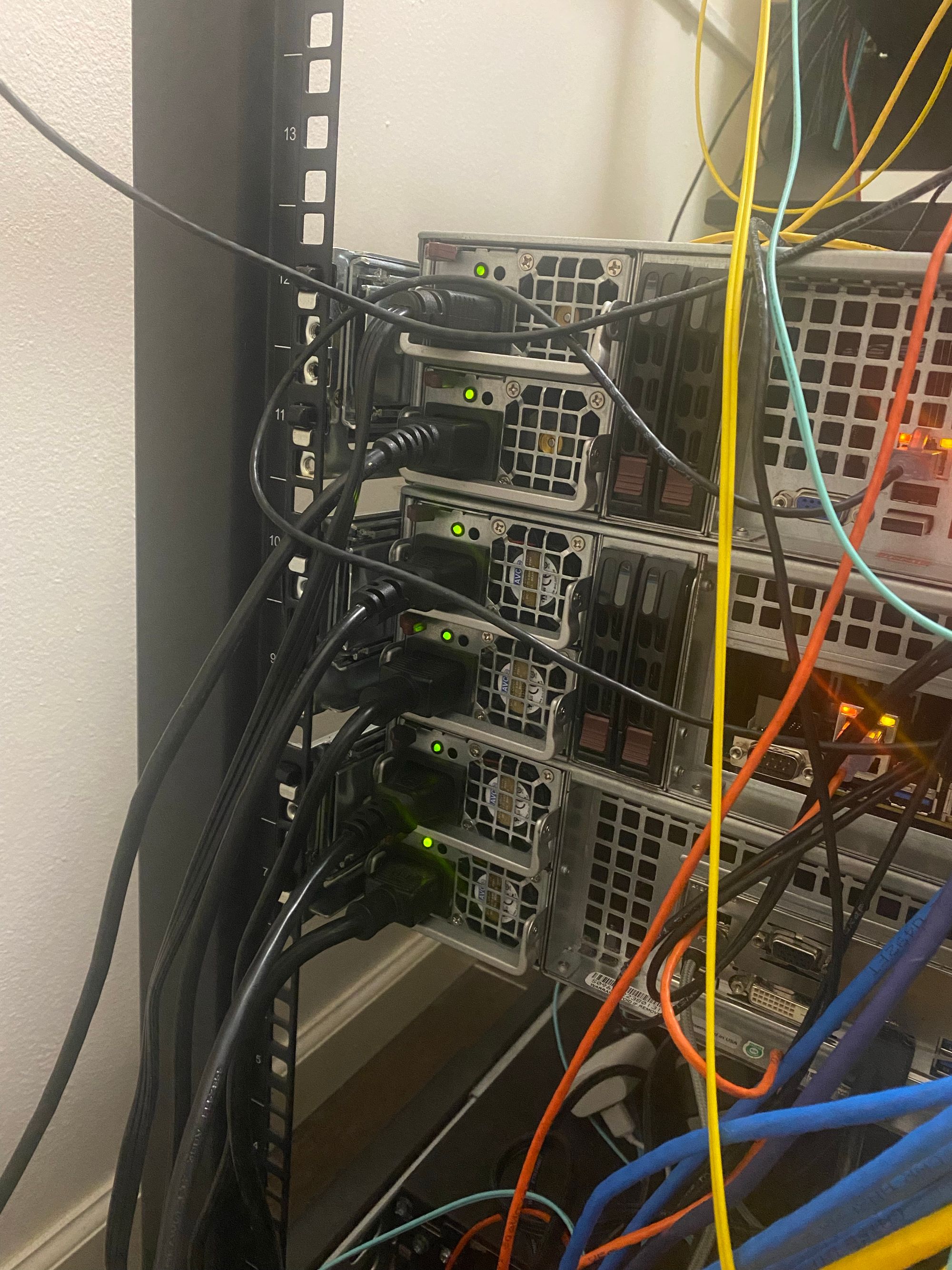

The middle system is the NAS.

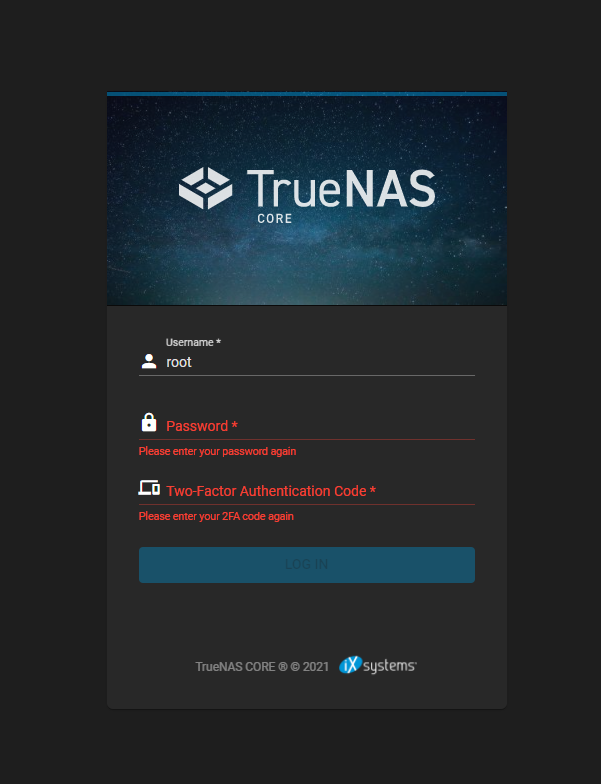

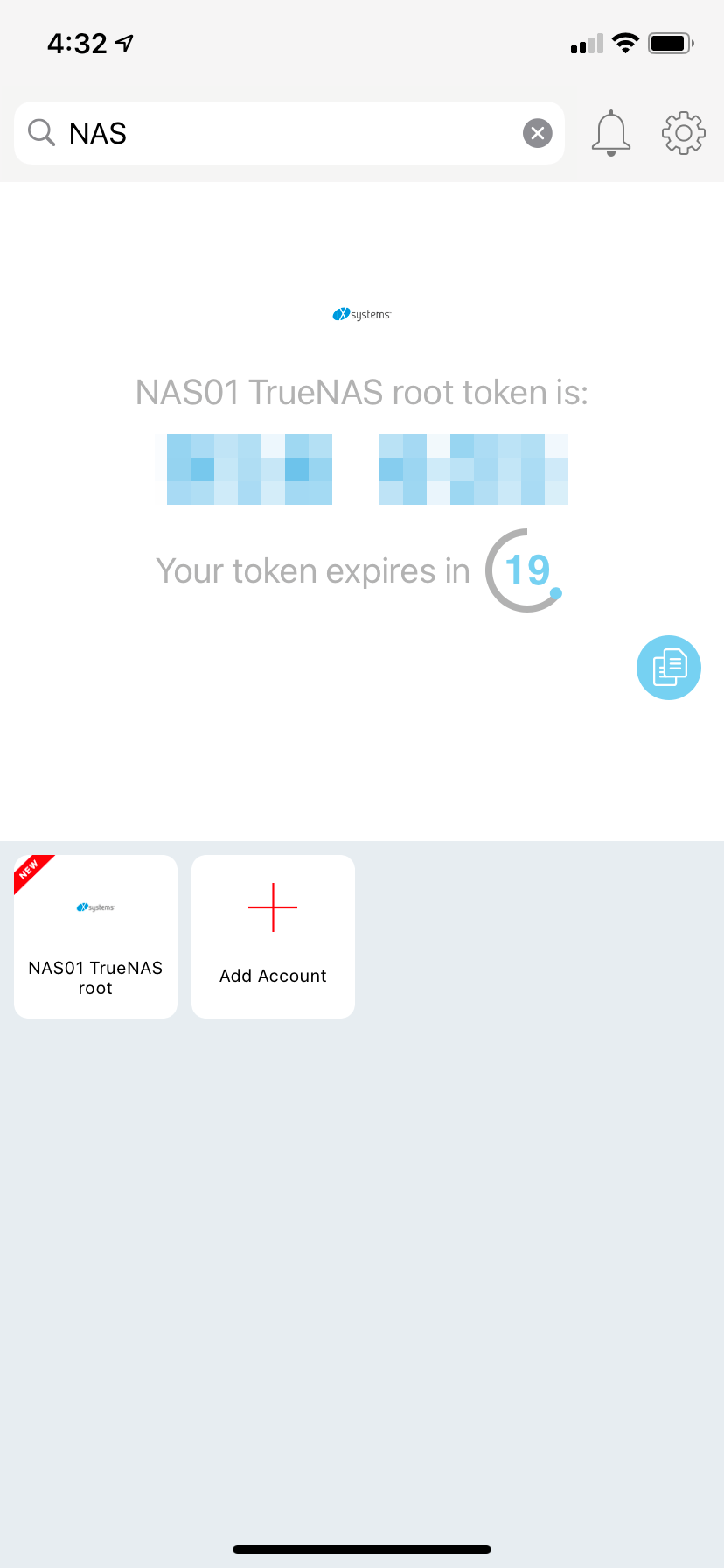

To log into the system you need the root password, as well as the 2FA code for added protection.

The NAS is encrypted with a passphrase, meaning that when the NAS boots, I must manually log in and enter the passphrase to unlock the data. This means that if someone steals the NAS, it's effectively impossible for them to get to the data without the passphrase.

Snapshots

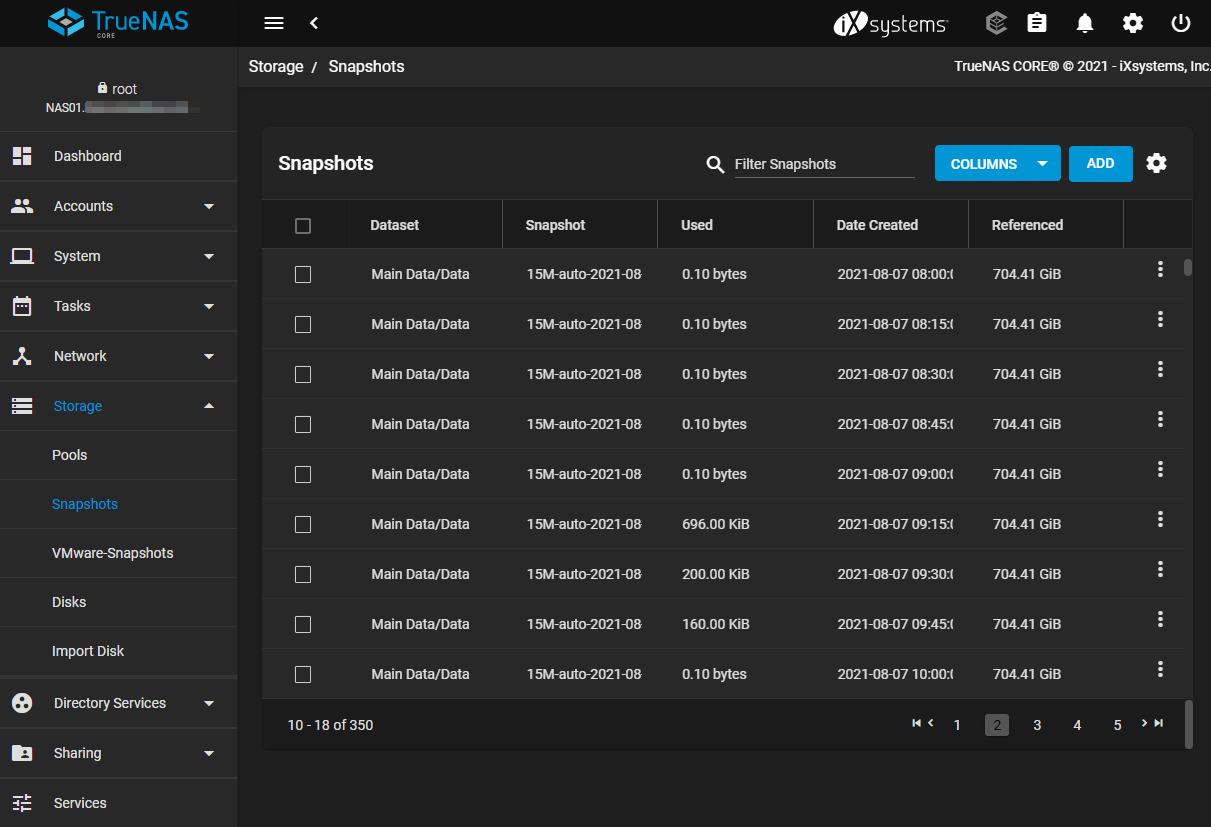

I have TrueNAS take ZFS snapshots every 15 minutes. Snapshots allow me to instantly roll back a file, folder, or the entire filesystem to a point in time.

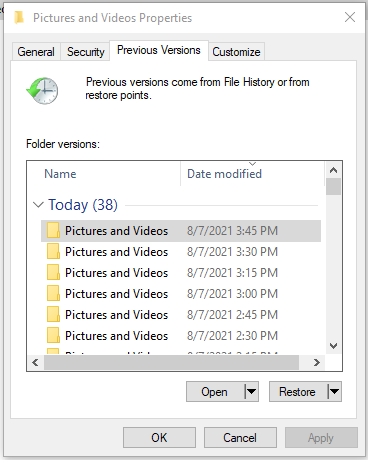

These snapshots show up in "Previous Versions" in Windows, allowing a very easy way to get files back.

NOTE: Synology also supports showing snapshots in Windows this way. However, not if you use encryption! Huge deal breaker there.

Here is the snapshot schedule I landed on:

- A Snapshot every 15 minutes with 8 hours of retention

- A Snapshot every hour with 2 days of retention

- A Snapshot every day with 2 weeks of retention

- A Snapshot every week with 12 months of retention

This means if I delete a file, alter a file, or get hit with ransomware, I can easily go back in time to an earlier version of the file.
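As a rough sanity check on the schedule above, this Python sketch works out how many snapshots each tier holds at steady state. The tier names are my own labels, and "12 months" is approximated as 365 days.

```python
from datetime import timedelta

# (interval, retention) per tier, taken from the schedule above.
# The tier names are illustrative; 12 months is approximated as 365 days.
SCHEDULE = {
    "15min": (timedelta(minutes=15), timedelta(hours=8)),
    "hourly": (timedelta(hours=1), timedelta(days=2)),
    "daily": (timedelta(days=1), timedelta(weeks=2)),
    "weekly": (timedelta(weeks=1), timedelta(days=365)),
}


def retained_count(interval: timedelta, retention: timedelta) -> int:
    """Number of snapshots a tier keeps once it has run for a full retention period."""
    return retention // interval


def total_retained(schedule) -> int:
    """Total snapshots across all tiers at steady state."""
    return sum(retained_count(i, r) for i, r in schedule.values())
```

Under those assumptions the four tiers hold 32, 48, 14, and 52 snapshots respectively, which is a small number for ZFS to manage.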

Snapshots don't consume any extra space other than retaining the removed files. So if I delete a 10GB file, I don't get that 10GB back until the snapshots expire. But they don't use extra storage space for new files.
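The space behaviour can be illustrated with a toy model in Python. This is a deliberate simplification: it tracks whole files, whereas ZFS actually tracks blocks, and the `ToyPool` class is purely hypothetical. The idea is the same though: data is only freed once neither the live filesystem nor any snapshot references it.

```python
class ToyPool:
    """File-level toy model of snapshot space accounting (real ZFS works on blocks)."""

    def __init__(self):
        self.live = set()      # (name, size_gb) pairs visible in the live filesystem
        self.snapshots = []    # each snapshot is a frozen copy of `live`

    def write(self, name, size_gb):
        self.live.add((name, size_gb))

    def delete(self, name):
        self.live = {f for f in self.live if f[0] != name}

    def snapshot(self):
        self.snapshots.append(frozenset(self.live))

    def expire_oldest(self):
        if self.snapshots:
            self.snapshots.pop(0)

    def used_gb(self):
        # Space is held by anything the live filesystem OR any snapshot references.
        referenced = set(self.live)
        for snap in self.snapshots:
            referenced |= snap
        return sum(size for _, size in referenced)
```

In this model, deleting a 10GB file after a snapshot leaves usage unchanged until that snapshot expires, and a new file only ever costs its own size.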

Snapshots by themselves are not a backup: if the entire array fails, the snapshots are gone too. To turn them into "backups", I'm using replication.

Replicated Snapshots / Local Backup

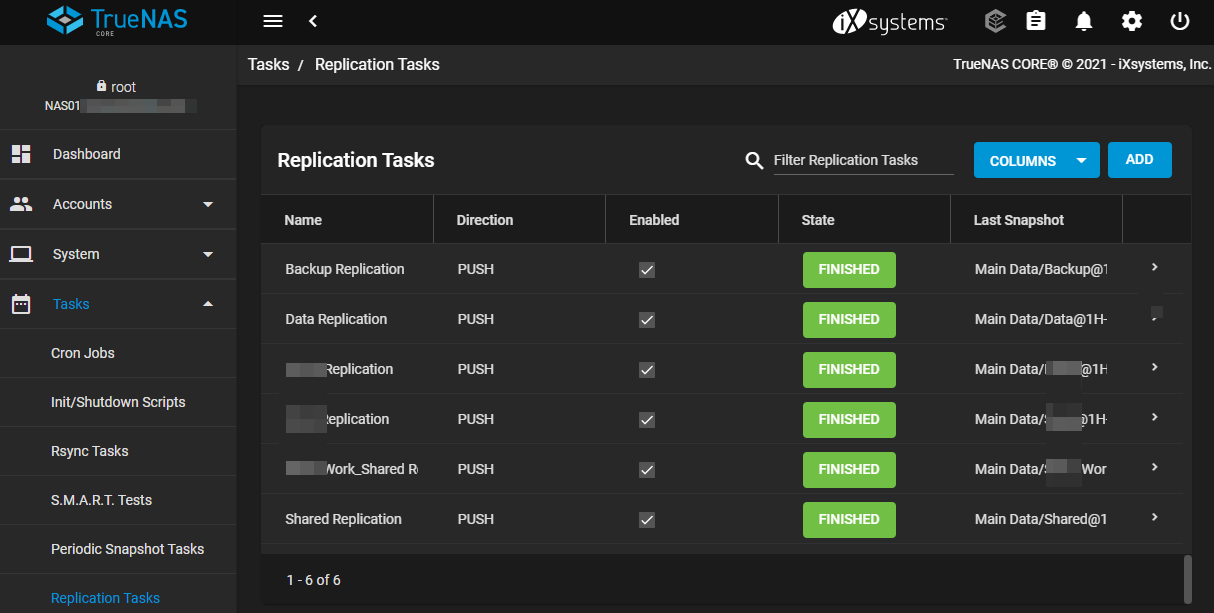

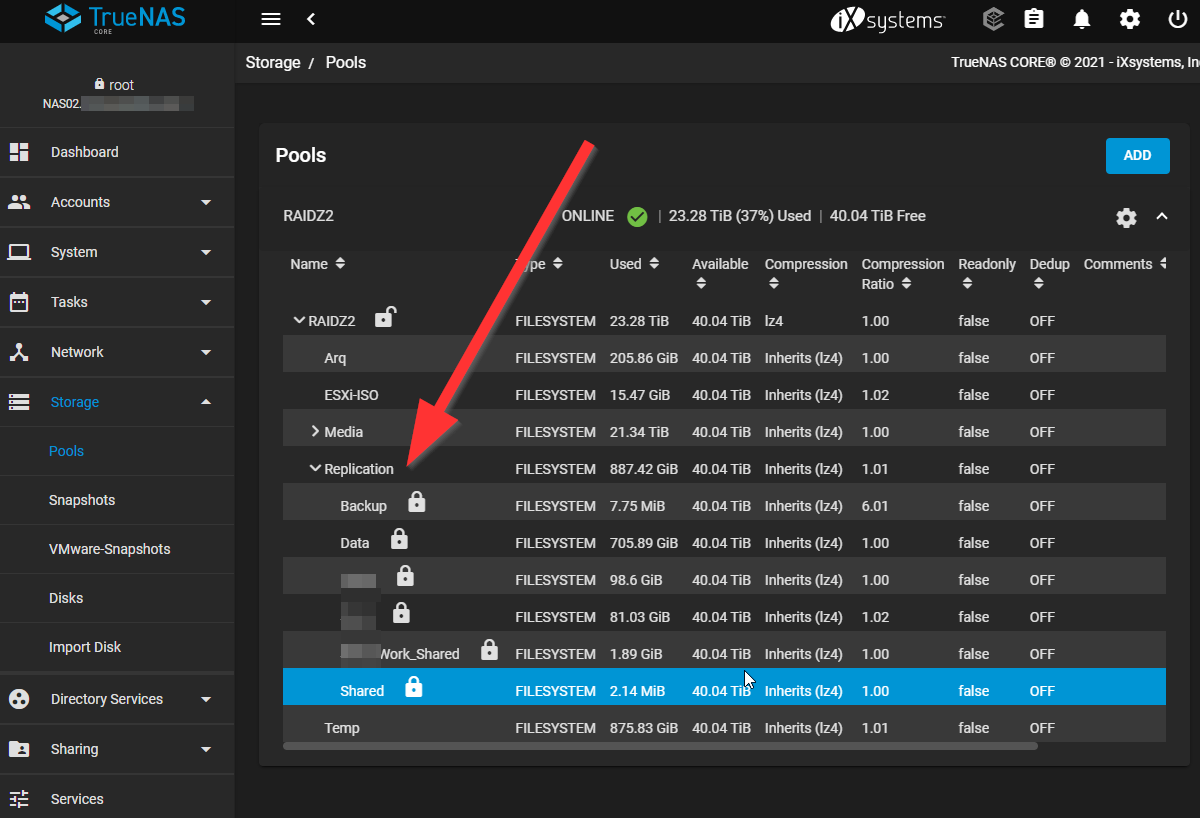

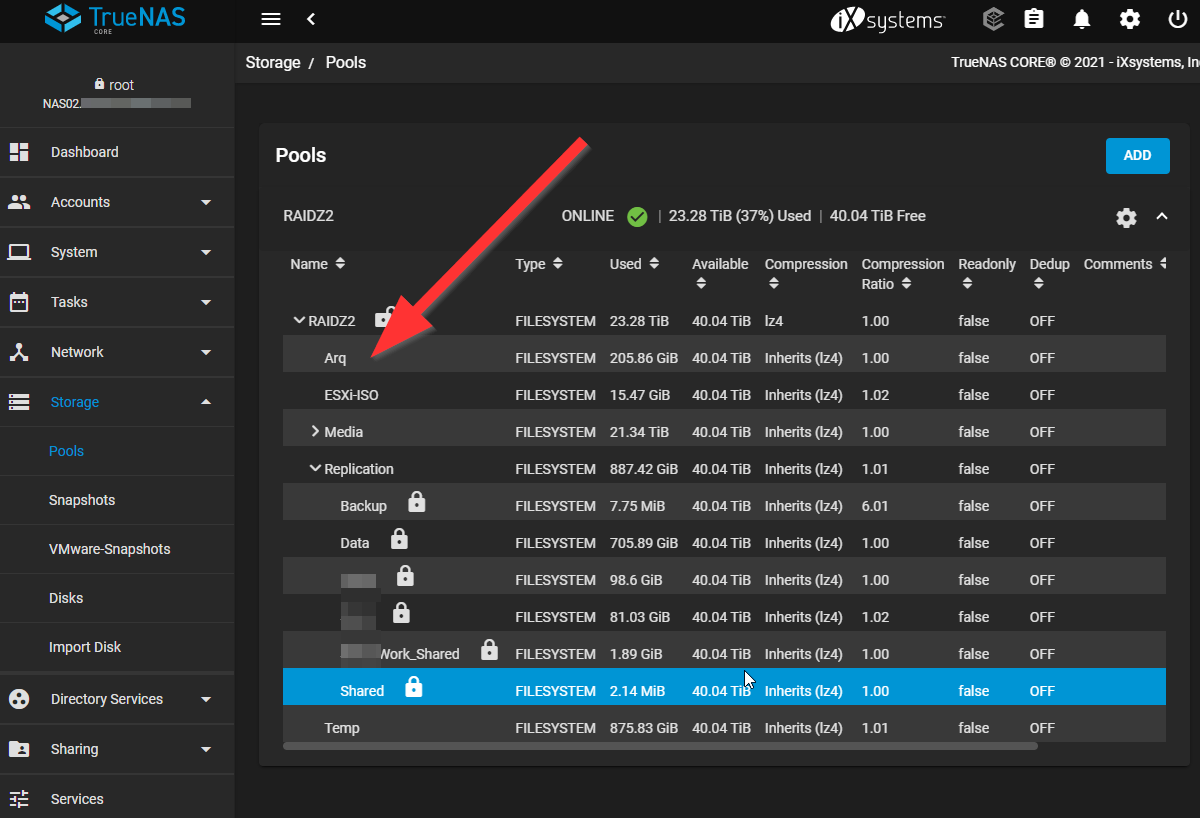

I replicate those snapshots to a second NAS in my rack. This NAS is actually a VM in ESXi with 12 x 8TB disks in RAIDZ2 for bulk media, and I use a portion of that pool to hold the replicated data and all snapshots. Replication runs after each snapshot, so the data on the second NAS is at most 15 minutes out of date. This means that even if my main NAS fails completely, I can still use all my data and I retain all past file history, as ALL snapshots are replicated.

Notice I said I can still use the data. Because I have configured the SMB shares and all user accounts on this NAS the same as on the first, I can simply unlock the datasets and go back to working with the data by connecting to the second NAS instead of the first. This is something a lot of people leave out of their backup plans. If you need to wait 8 hours to restore all your files, but you need them right now, you have a problem!

The replicated data must be unlocked manually with the passphrase from the main NAS.
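For anyone curious how snapshot replication stays cheap, here is a small Python sketch of the general idea behind incremental replication: find the newest snapshot both sides already have, then send only the snapshots newer than that. This is a conceptual model, not what TrueNAS runs internally, and the snapshot names are hypothetical.

```python
from typing import List, Optional, Tuple


def plan_replication(
    source: List[str], target: List[str]
) -> Tuple[Optional[str], List[str]]:
    """Return (base, to_send) for an incremental replication run.

    `source` is ordered oldest to newest. `base` is the newest snapshot
    present on both sides (None means a full send is needed), and
    `to_send` lists the source snapshots newer than `base`.
    """
    on_target = set(target)
    base = None
    base_index = -1
    for i, name in enumerate(source):
        if name in on_target:
            base, base_index = name, i
    return base, source[base_index + 1:]
```

For example, if the target already holds everything up to `auto-0915`, only the snapshots taken after that point need to cross the wire.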

This replication satisfies my plan for local backups, but I wanted to go a step further.

Long term local backup

I am keeping the snapshots for a full year. But what if I want to restore a file from over a year ago? For that I am using Arq backup. You will see Arq again in this post, as I also use it for cloud backup. I am keeping Arq backups for 10 years (but it's unlikely I'll ever reach this retention before I change something!).

Using Arq backup for local backups also means that if there is a problem with the snapshots, I have something to fall back on. I have Arq backing up data from my main NAS to the second one.

You can see the dataset is just 200GB. This is because I have excluded MP3 and FLAC files from those backups. Those are not as important as the other media, and they never get changed.

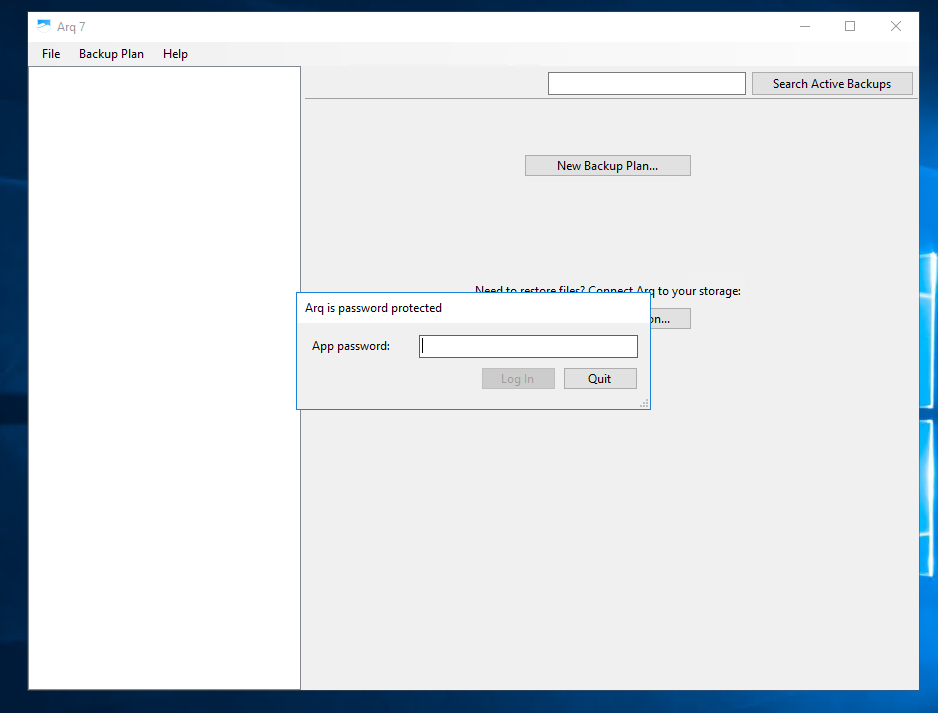
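The exclusion itself is configured in Arq, but the rule is simple enough to sketch in Python. The function name and paths below are made up for illustration and have nothing to do with Arq's own settings format.

```python
from pathlib import PurePosixPath

# File types left out of the Arq backups, per the paragraph above.
EXCLUDED_SUFFIXES = {".mp3", ".flac"}


def should_back_up(path: str) -> bool:
    """Return False for MP3/FLAC files, matched case-insensitively by extension."""
    return PurePosixPath(path).suffix.lower() not in EXCLUDED_SUFFIXES
```

Matching on the lowercased extension means files like `track01.FLAC` are skipped too.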

The backup software Arq is running in a Windows Server VM on my ESXi server. Having it completely separate from the NAS makes it easy to get to the software if the main NAS fails. It also won't run on TrueNAS.

To even open Arq, you must enter the application password.

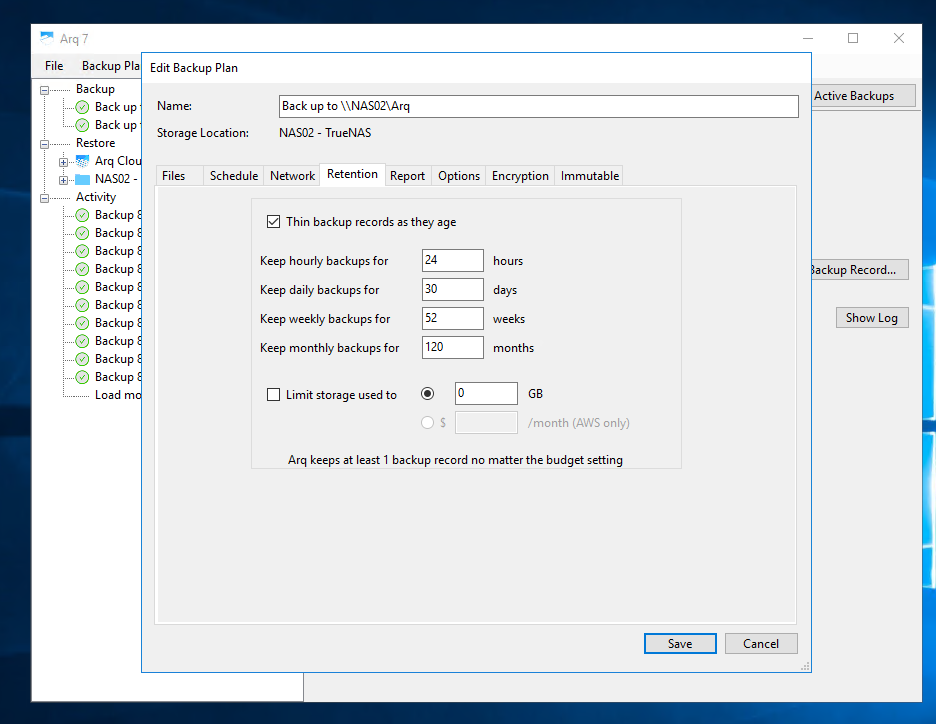

I do backups every day at 4am, and this is the retention schedule:

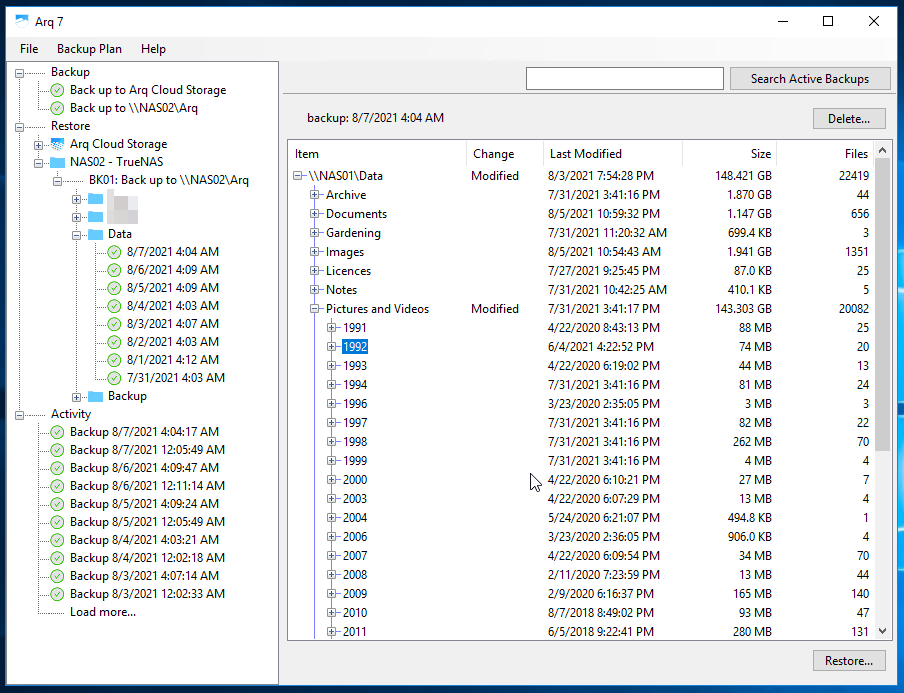

Arq has a good interface for browsing backups

Short Term Cloud Backup

I am also using Arq for cloud backups. I pay for Arq Cloud, which gives me access to the software and 1TB of cloud storage (extra is billed per GB).

For my main important files, this is just about the right amount of storage.

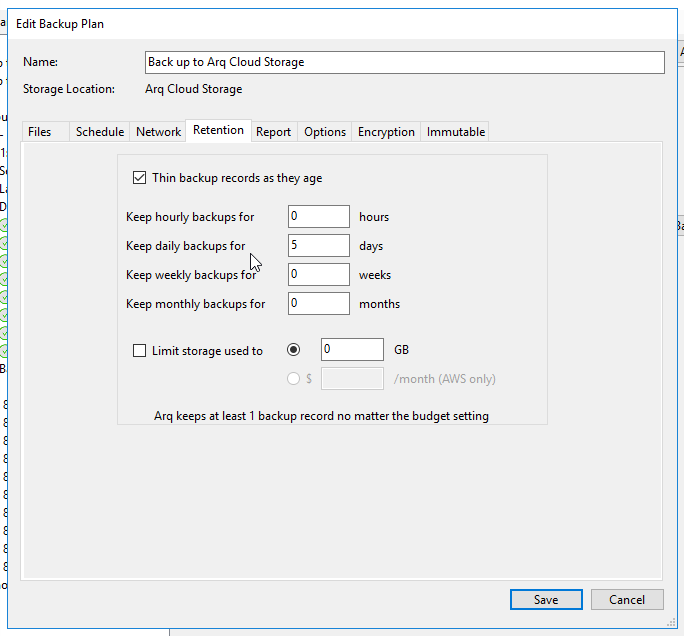

Because I am keeping such a long history locally, if I need to restore a file, that's where I will do it. For that reason I am only keeping 5 days of backups in the cloud: if I am restoring from these, I have probably lost everything!
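A short retention window like this boils down to a simple pruning rule. Here is a minimal Python sketch of it, assuming one backup per day at 4am; the function is illustrative only and is not how Arq implements retention.

```python
from datetime import datetime, timedelta
from typing import List


def prune(backups: List[datetime], now: datetime,
          keep: timedelta = timedelta(days=5)) -> List[datetime]:
    """Keep only backups taken less than `keep` ago, preserving their order."""
    return [taken for taken in backups if now - taken < keep]
```

With daily backups, this leaves the five most recent ones in the cloud at any time.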

Long Term Off-Site, Offline Backup

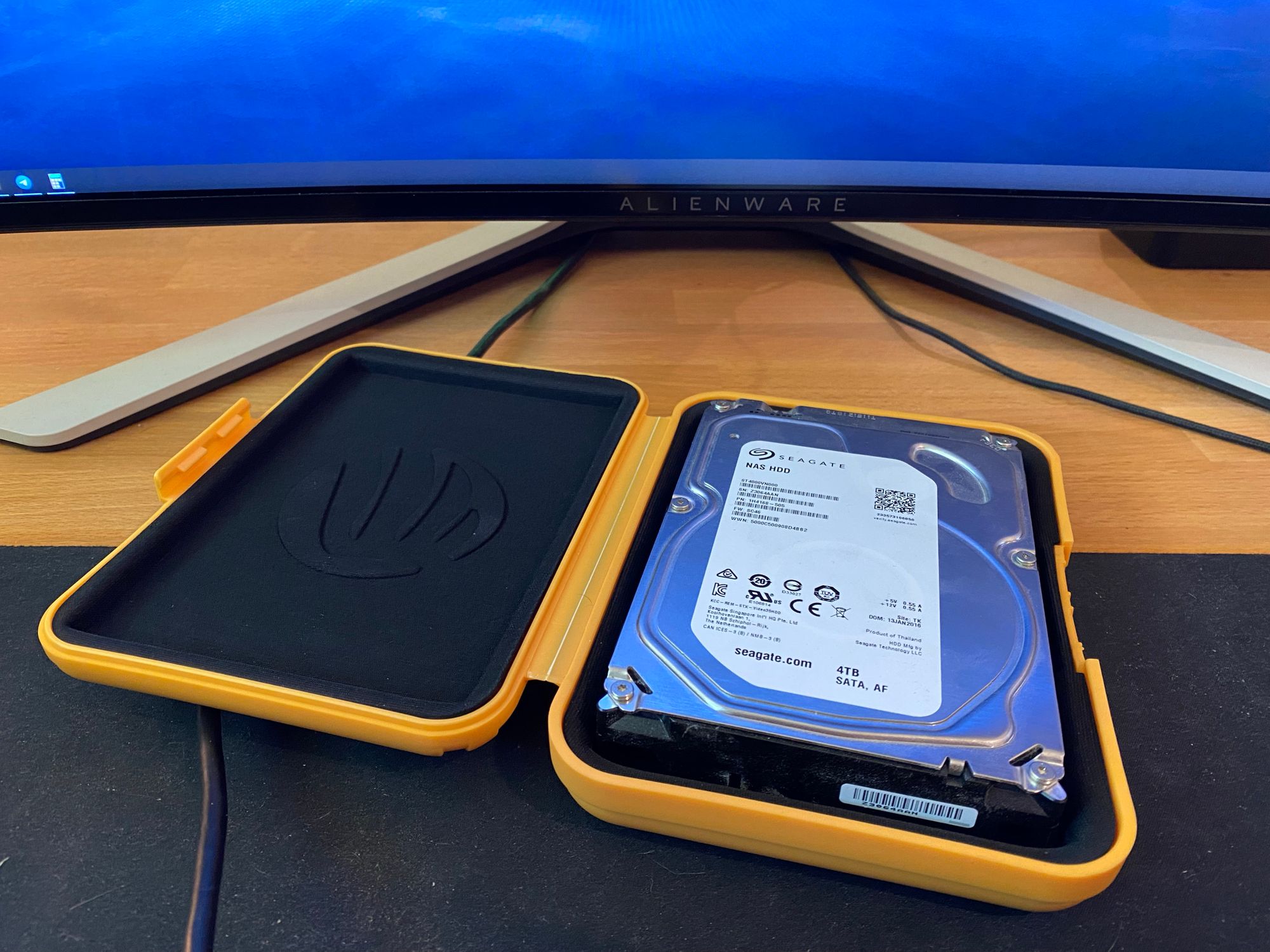

Just like before, I am also doing an off-site, offline backup to a 4TB SATA hard drive that I keep in a case in my work drawer. I just started going back into the office, and my new office is on a very high floor of a building, meaning it's unlikely to be destroyed in a flood.

I keep the SATA dock connected to my desktop PC.

To back up to this drive, I am using Borg backup. It's a very simple backup program. Every month I bring the drive home from work, run a backup, and take it back to work. The drive is encrypted with BitLocker, and the backups themselves are encrypted as well.

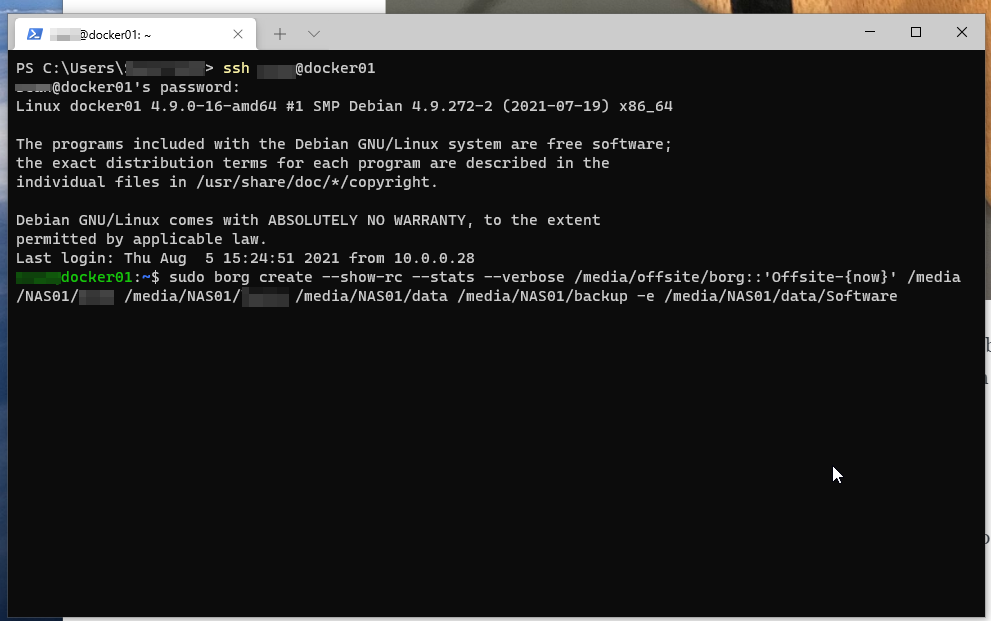

To use Borg, I mounted my shares on a Debian box, along with the shared docked drive. I just SSH to the box and run the backup command.
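For reference, a monthly run like this could be wrapped in a small Python helper that builds the `borg create` command. This is only a sketch assuming Borg 1.x syntax (`REPO::ARCHIVE` followed by source paths); the repository path, source path, and archive naming scheme are placeholders, not my real ones.

```python
import datetime
from typing import List


def build_borg_create(repo: str, sources: List[str],
                      when: datetime.date) -> List[str]:
    """Build a Borg 1.x `borg create` command with a dated archive name.

    The returned list can be passed to subprocess.run(cmd, check=True)
    on a machine where borg is installed and the repository exists.
    """
    archive = f"{repo}::monthly-{when.isoformat()}"
    # --stats prints a summary of the archive once the backup finishes.
    return ["borg", "create", "--stats", archive, *sources]


# Hypothetical paths for illustration only.
EXAMPLE_CMD = build_borg_create(
    "/mnt/offsite/borg",
    ["/mnt/nas/documents"],
    datetime.date(2021, 6, 1),
)
```

Building the command as a list keeps paths with spaces safe and makes it easy to inspect before actually running it.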

I also mirror my Veeam backup folder to this drive.

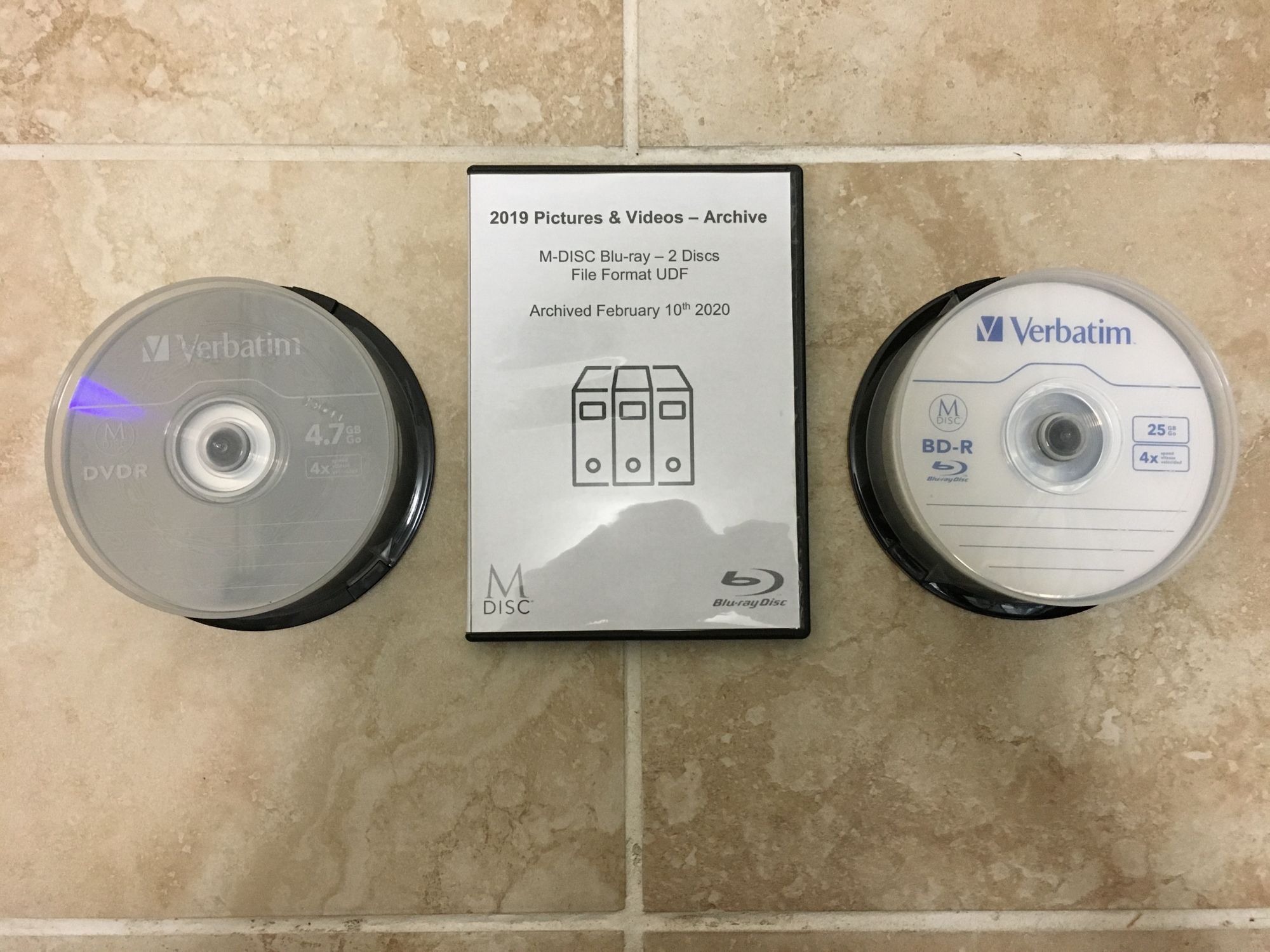

M-DISC Archive

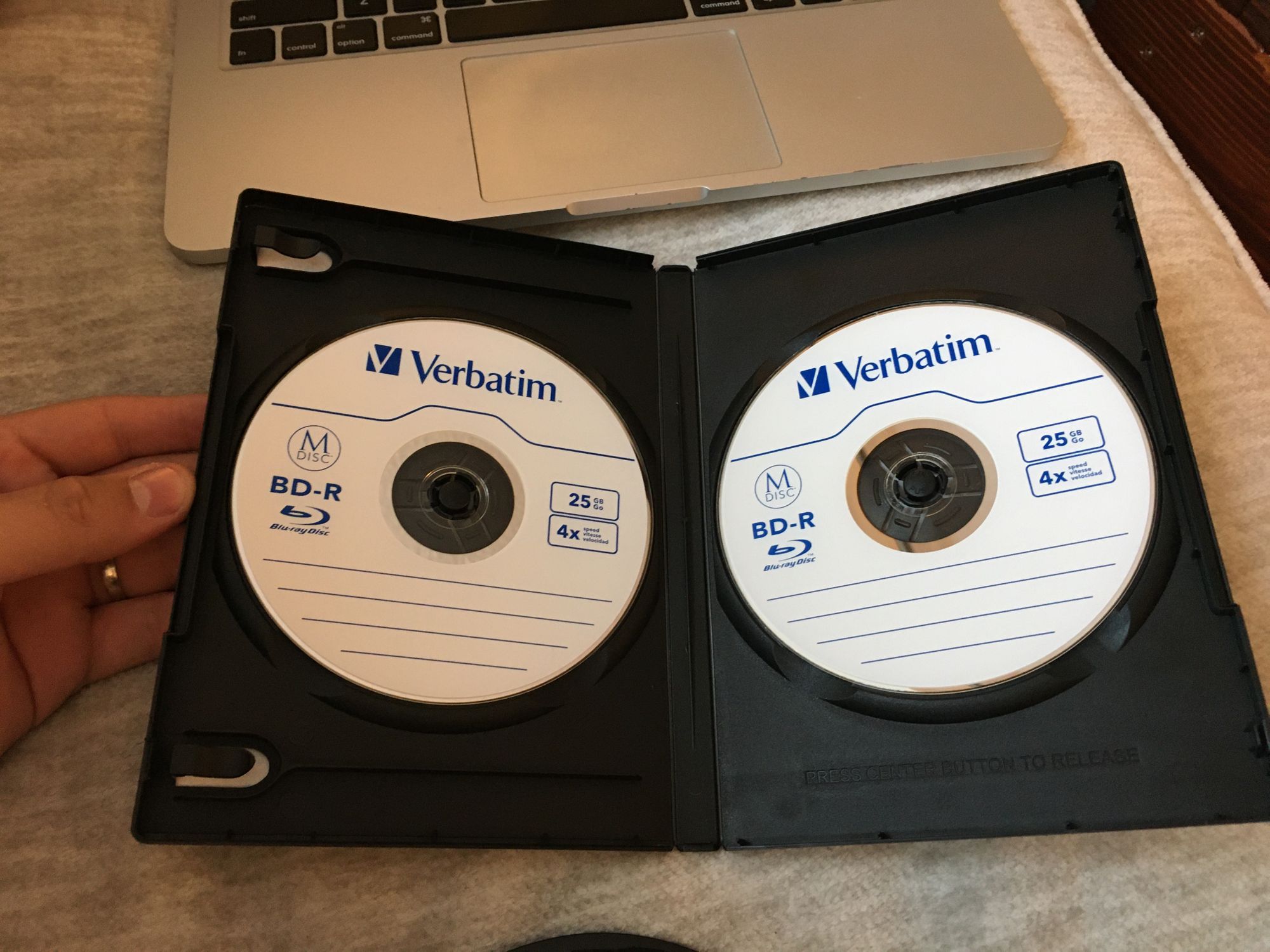

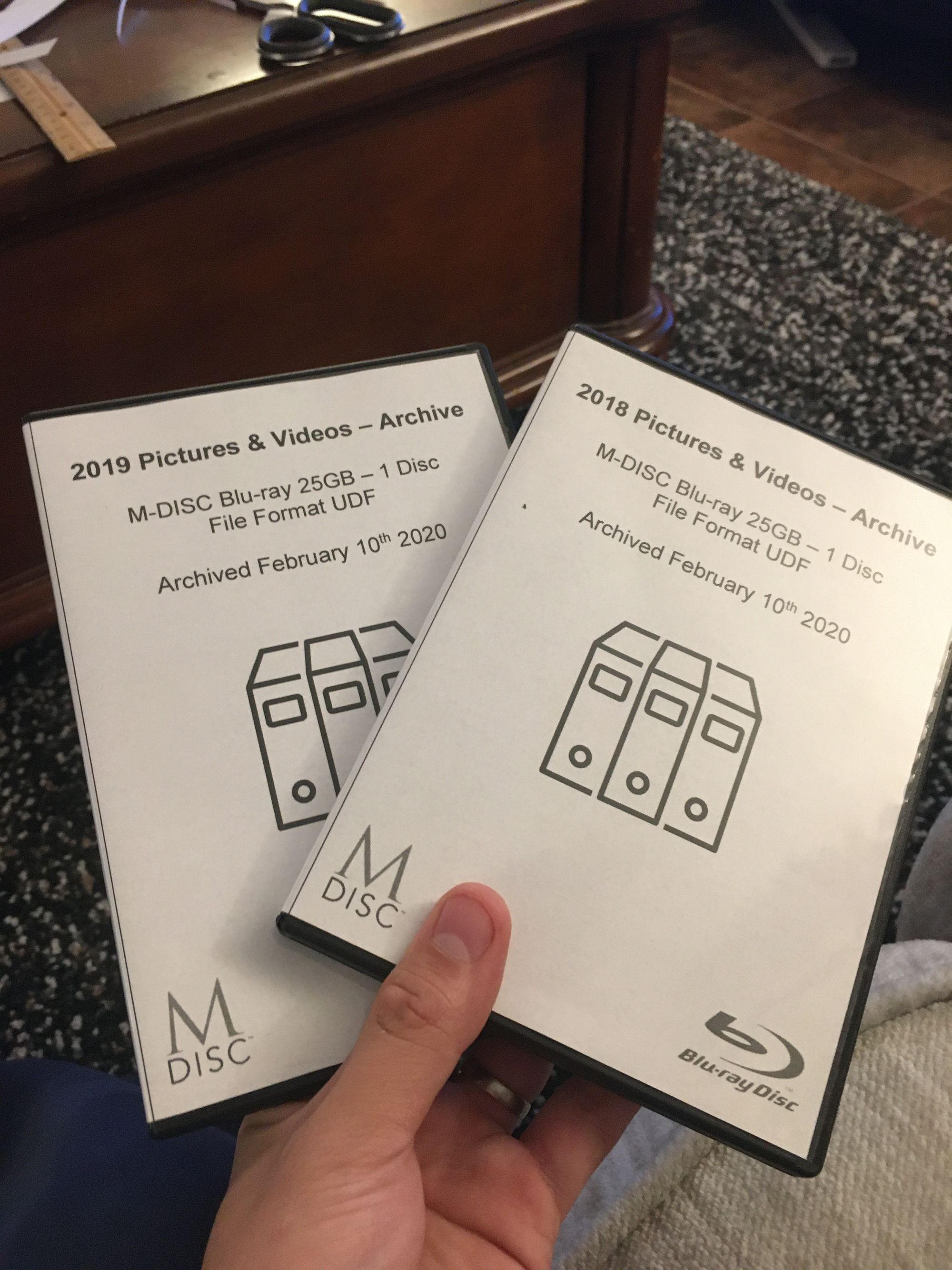

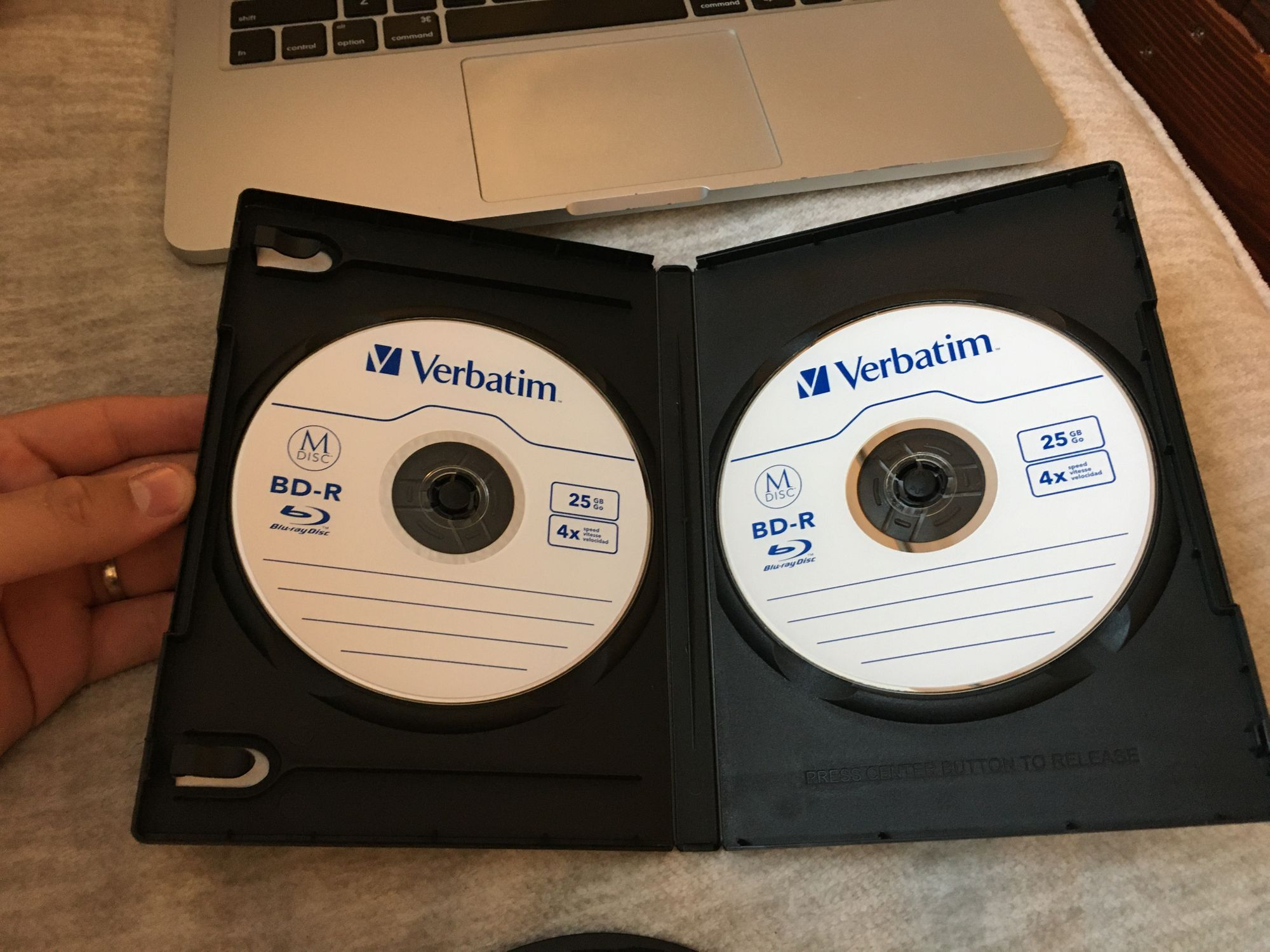

And as before, I am still archiving my pictures to M-DISC, which has a rated shelf life of 1,000 years.

You can read more about this here:

Tape?

I ditched the tape library and sold it on. I realized that if I wanted to use it as a real backup, I would have to be prepared to buy another tape library if mine got destroyed. And I just wasn't prepared to do that when I could rely on much more common, cheap hardware to do the same thing.

If you have any questions or suggestions, please contact me!

Thanks for reading