Full Lab Details - September 2020

After I posted my full lab history, a lot of people asked for details on what I do with everything in my home server rack. Here are the full details. I have not cleaned up for the pictures, so expect some dust and some works in progress.

If you have any questions, I would love to hear them so I can add them to the Q&A section at the bottom.

I am going to try to break this up into sections to make it easier to follow.

Network & IoT

The top rows are keystone patch panels and a cable management device. Here I have patches that go out to the rest of the house, the garage, cameras, etc. As you can tell, this is a work in progress: I have 8 more runs to terminate that are currently just hanging. There are also some LC connections here that go to my office and my garage for 10G connectivity, backed by OM3 fiber.

The patch cables are Monoprice Slimrun Cat6a. They are very thin and excellent, and I have had no issues with them, even with PoE loads.

Below that you can see my Supermicro 1U pfSense firewall. It has a Supermicro X11SCL-LN4F motherboard with 4 x Intel 1Gb NICs. The CPU is an Intel Pentium Gold G5500, a nice new lower-power chip that still has plenty of performance; it handles my 1G/1G fiber connection without breaking a sweat. I also have 2 x 4GB sticks of unbuffered ECC DDR4 RAM in there. I went with 8GB because features like IPS and packet inspection can increase memory usage a bit.

More details HERE

The firewall handles all routing on the network, along with DHCP, DNS, VPN tunnels for remote access, and my PIA VPN.

When you see the images of the back of the rack further down, you will notice I have AT&T fiber, and their gateway is just thrown behind the rack with a single cable into it. This is because I am completely bypassing it with pfatt, which only relies on the gateway for 802.1X authentication. My ONT connects DIRECTLY into pfSense, so I have nothing between me and the internet. This is needed because AT&T's gateway always does NAT, even in "passthrough" mode, and its NAT table is very small, which causes performance issues.

Below that is my main switch, a Dell X1052P. I am currently looking to replace it with a Cisco IOS-based switch so I can get a proper CLI. On paper this switch is great: it looks nice, it has 4 x SFP+ ports for 10G, it's quiet, and it's new, so it uses less power. But it has virtually no CLI configuration; you must use the GUI, which is absolutely horrible and slow. Because it's the P model, it supports PoE+, which I use for quite a few cameras, APs and other devices. I am using the SFP+ ports for uplinks to my garage lab, my desktop PC, my ESXi server and my Synology NAS.
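
With a lot of cameras and APs on PoE+, it is worth checking that the worst-case draw fits within the switch's total PoE budget. Below is a minimal sketch of that check. The per-port maximums (15.4W for 802.3af, 30W for 802.3at PoE+, measured at the switch) come from the IEEE standards; the device list and the 370W budget are hypothetical placeholders, not confirmed X1052P figures, so check the datasheet for the real number.

```python
# Maximum power the switch (PSE) may source per port, in watts.
POE_CLASS_MAX_W = {
    "802.3af": 15.4,  # PoE
    "802.3at": 30.0,  # PoE+
}


def poe_budget_report(devices, budget_w):
    """Compare worst-case (class maximum) PoE allocation to a switch budget.

    devices: list of (name, standard) tuples, e.g. ("camera-1", "802.3af").
    budget_w: total PoE budget of the switch in watts (hypothetical here).
    """
    allocated = sum(POE_CLASS_MAX_W[std] for _, std in devices)
    return {
        "allocated_w": round(allocated, 1),
        "headroom_w": round(budget_w - allocated, 1),
        "fits": allocated <= budget_w,
    }


# Hypothetical device mix, not the actual inventory in this rack.
devices = [("camera-%d" % i, "802.3af") for i in range(1, 7)]
devices += [("ap-%d" % i, "802.3at") for i in range(1, 4)]

print(poe_budget_report(devices, budget_w=370.0))
```

Many switches allocate by a device's advertised class or by measured draw rather than the class maximum, so this is a conservative upper bound.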

Below that is just another cable management device.

Finally, there is a tray with a few devices on it: my Hubitat hub, an old Hive hub that is no longer used, and a RIPE Atlas probe. The probe helps the RIPE NCC take latency measurements, and hosting it earns you credits you can use to run your own measurements.

Storage and Compute

Next up is my Synology DS1817+, which has a Mellanox ConnectX-2 card in it for 10G connectivity. It currently has a mix of drives in an SHR-2 array, which gives me about 46TB of capacity. There are no servers running on the NAS, like Plex or anything else; all of that happens on my ESXi server, which we will get to. Next to the NAS is a small 8TB Easystore drive that I use for local backups of important files.
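
SHR-2 keeps two drives' worth of parity, and with mixed drive sizes the usable space is easiest to reason about as "layers". The sketch below uses a simplified layer model, which is an assumption and not Synology's exact algorithm: drives are sliced at each distinct size, and each slice shared by k drives contributes (k - 2) times its height. The drive sizes are hypothetical, since the post does not list the actual mix.

```python
def shr_usable_tb(drive_sizes_tb, parity=2):
    """Approximate usable capacity of a Synology SHR-style array in TB.

    Simplified layer model (an assumption, not Synology's exact algorithm):
    drives are sliced into layers at each distinct drive size, and every
    layer shared by k drives contributes (k - parity) * layer_height.
    parity=2 approximates SHR-2; parity=1 approximates SHR-1.
    """
    sizes = sorted(drive_sizes_tb)
    usable = 0.0
    previous = 0.0
    for i, size in enumerate(sizes):
        height = size - previous
        if height <= 0:
            continue  # same size as the previous drive, no new layer
        drives_in_layer = len(sizes) - i  # this drive and every larger one
        usable += max(drives_in_layer - parity, 0) * height
        previous = size
    return usable


# Hypothetical 8-bay mix, not the actual drives in the DS1817+.
print(shr_usable_tb([4, 4, 8, 8, 8, 8, 10, 10]))  # 40.0
```

The result is in decimal TB; DSM reports sizes in binary units, so its figure will look smaller for the same array.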

Below that is my Blue Iris server. It has a 6-core Intel Core i5-9400 CPU, 8GB of RAM, and around 16TB of storage from random old drives, which holds all my camera recordings. The server also has a quad-port Intel NIC, which lets it connect to both the regular VLAN for general use and a protected camera VLAN.
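
How long ~16TB of recordings lasts depends on how many cameras record continuously and at what bitrate. Here is a back-of-the-envelope sketch; the camera count and the 4 Mbps average bitrate are hypothetical, since the post gives neither.

```python
def retention_days(storage_tb, bitrates_mbps):
    """Days of continuous recording that fit in storage_tb (decimal TB).

    bitrates_mbps: average stream bitrate of each camera in megabits/s.
    Ignores filesystem overhead and motion-only recording, so real
    retention with motion-triggered cameras will be longer.
    """
    bytes_per_day = sum(bitrates_mbps) * 1e6 / 8 * 86_400
    return storage_tb * 1e12 / bytes_per_day


# Hypothetical: eight cameras averaging 4 Mbps each.
print(round(retention_days(16, [4] * 8), 1))  # 46.3
```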

More details HERE

Below that is my ESXi server. There is a lot to go over here, so I may skip over some things. If you have any more questions, please email me.

It has 256GB of registered ECC DDR4 RAM and 2 x Xeon E5-2680 v4 CPUs, each with 14 cores and Hyper-Threading for 28 threads. That gives my ESXi server 28 cores and 56 threads to play with. This is WAY more CPU power than I need, but I had to get the second CPU to make all of the server's PCIe slots available: with just one CPU, half of them are disabled! There are no drives in the front right now, but I might add some later. In the back there are 2 x 2.5" bays holding 2 x 800GB Intel DC S3700 SSDs, which serve as scratch space for my tape library, and there is a Micron Enterprise 9100 PRO 3.4TB NVMe SSD in the server for VM storage. It's a single SSD, but it uses RAIN (Redundant Array of Independent NAND, similar to RAID), so it has redundancy across its flash chips.

It runs ESXi 6.7, as I have not gotten around to verifying whether all of my systems (such as Veeam) will play nicely with ESXi 7.0. On it I have vCenter, a few Docker hosts (where Plex lives, along with other things like LibreNMS), a few Windows VMs for Veeam and a domain controller, and some VDI machines I access over RDP. The VMs on here change all the time, because I am always playing with new things.

Below that is my tape library, which I use to archive data as well as back up large files. It has 2 x LTO5 drives that can be written to at the same time. LTO5 is a pretty old generation, and each tape holds 1.5TB of data (uncompressed). It's not much, but the tapes are cheap! The library connects to the ESXi server with 2 x SAS connectors. In the ESXi box I have an HBA that is passed through to a Windows VM, where I use IBM's LTFS software along with Veeam.
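
To get a feel for what two LTO5 drives mean in practice, the sketch below estimates how many tapes a job needs and a best-case write time. It uses LTO-5's native figures (1.5TB and roughly 140 MB/s per drive, uncompressed) and assumes the data can be split evenly across both drives streaming at full speed; the 10TB job size is hypothetical.

```python
import math

LTO5_NATIVE_TB = 1.5     # native (uncompressed) capacity per tape
LTO5_NATIVE_MB_S = 140   # native sustained write speed per drive


def lto5_job(data_tb, drives=2):
    """Return (tapes needed, best-case hours) to write data_tb uncompressed.

    Assumes the data is split evenly across `drives` that stream at full
    native speed in parallel, with no tape changes, verify passes, or
    shoe-shining from a slow source, so real jobs will take longer.
    """
    tapes = math.ceil(data_tb / LTO5_NATIVE_TB)
    seconds = data_tb * 1e6 / (LTO5_NATIVE_MB_S * drives)  # TB -> MB
    return tapes, round(seconds / 3600, 1)


# Hypothetical 10TB archive job on both drives.
print(lto5_job(10))  # (7, 9.9)
```

Keeping the drives streaming is the reason for fast scratch space such as the S3700 SSDs: if the source can't feed 140 MB/s per drive, the tape has to stop and reposition.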

More details HERE

The Synology NAS also has a USB 3.0 SATA dock plugged into it, which I use for off-site backups. You can read more about my backup plan HERE

Power

My power setup needs some organization, as I have made some changes. The top two devices are PDUs: they are basically fancy power strips that connect to the UPS to give you more outlets, and they are metered, which is nice. I have two because I used to have 2 x UPSes, and I was too lazy to remove the other PDU...

Below that is an APC SMX1500RM2U UPS with a network card. This model supports external battery packs, although I don't have any connected.

The power continues around the back, where I have 2 x 20A circuits, a 30A circuit and a bunch of power inlets. You can read all about this HERE
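
A quick way to see what those circuits can actually carry is the common 80% rule for continuous loads (the breaker is sized at 125% of the continuous load). The sketch below applies it; the voltages are assumptions, since the post does not say whether the 30A circuit is 120V or 240V.

```python
def continuous_watts(amps, volts=120, derate=0.8):
    """Usable continuous load on a branch circuit, in whole watts.

    derate=0.8 follows the common 80% rule for continuous loads.
    volts is an assumption: 120V is typical for 20A circuits in the US,
    but a 30A circuit may well be 240V.
    """
    return round(amps * volts * derate)


circuits = {"20A #1": 20, "20A #2": 20, "30A": 30}
for name, amps in circuits.items():
    print(name, continuous_watts(amps), "W at 120V")
print("30A", continuous_watts(30, volts=240), "W at 240V")
```

At 120V that works out to 1920W per 20A circuit, which is worth comparing against what the metered PDUs report.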

The extra UPSes are for the power inlets, just temporarily until my much larger UPS arrives.

Infrastructure & Climate

Here you can see where the cabling comes down the wall into the closet for networking. I have OM3 fiber in the orange tube, and a big mess of Cat5e, Cat6 and Cat6a, depending on where each run is going. One of the OM3 runs goes all the way out to my detached garage. You can also see the AT&T Fiber ONT here.

Here you can see a Watchdog 15P environmental probe I have on the wall in front of the rack. It does a great job at monitoring, logging and alerting on temperature and humidity, and I find it much more reliable than a Z-Wave sensor. More details HERE
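
Threshold alerts on a temperature probe are usually paired with hysteresis so a reading hovering near the limit doesn't send a flood of alerts. The sketch below illustrates that general technique on a list of readings; it is not how the Watchdog 15P implements its own alarms, and the 30°C/28°C thresholds and sample readings are hypothetical.

```python
def alert_states(readings_c, high=30.0, clear=28.0):
    """Return (reading, alerting) pairs using high/clear hysteresis.

    An alert starts when a reading reaches `high` and only clears once a
    reading drops to `clear` or below, so values bouncing between the two
    thresholds do not make the alert flap. Thresholds are hypothetical.
    """
    alerting = False
    states = []
    for t in readings_c:
        if not alerting and t >= high:
            alerting = True
        elif alerting and t <= clear:
            alerting = False
        states.append((t, alerting))
    return states


print(alert_states([27.5, 30.2, 29.1, 28.9, 27.8, 29.5]))
```

Note that 29.5°C at the end does not re-trigger, because the alert only fires again once the reading climbs back to 30°C.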

Bonus Lab, Garage

As a bonus, I have another rack in the garage. It needs some cleaning and I don't have many great pictures of it currently, but you can read the full writeup HERE

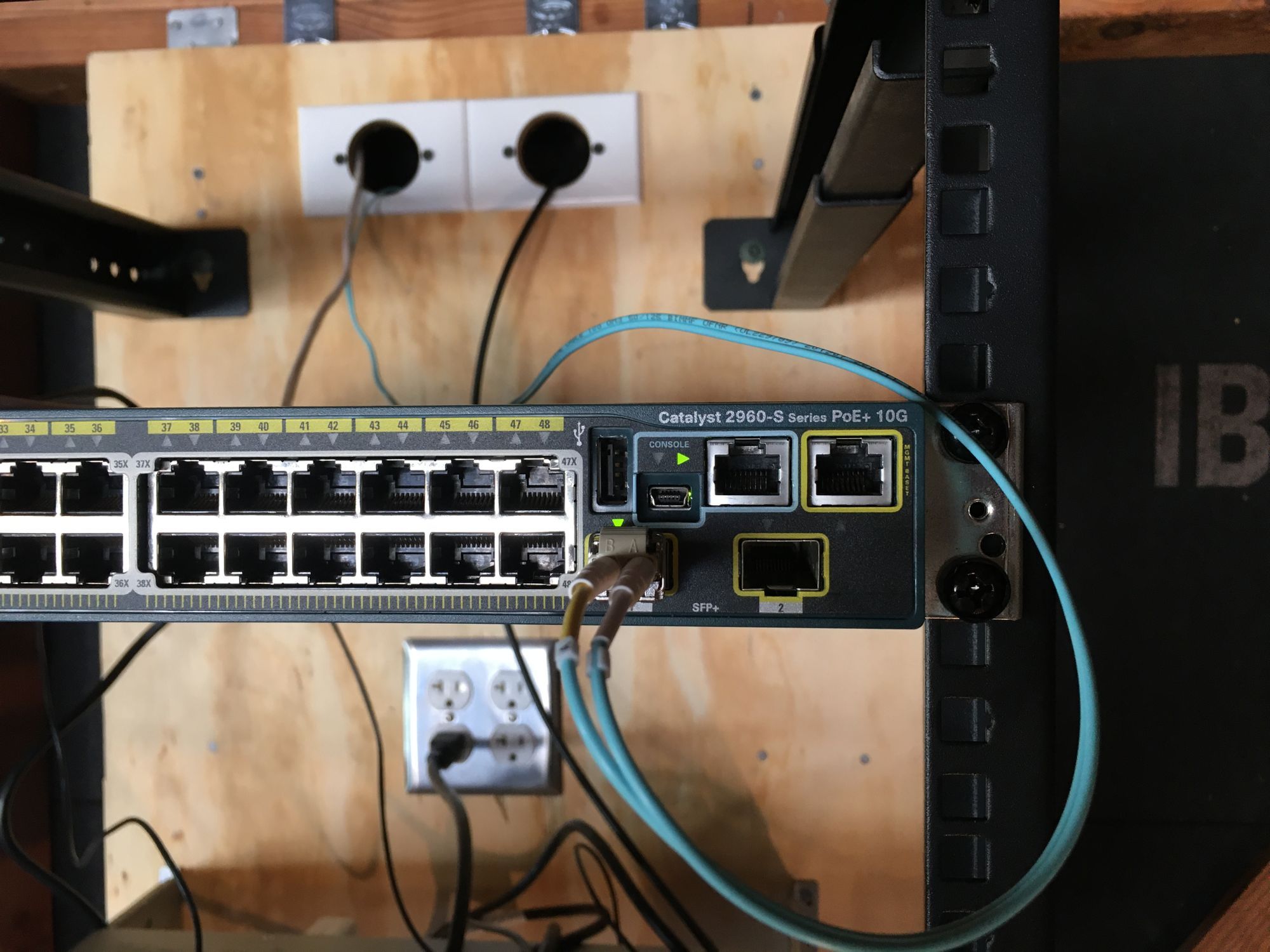

This rack has an APC SMT1000RM2U UPS, a Cisco 2960-S switch uplinked with 10G fiber, a small Lenovo M93 running ESXi, and a Raspberry Pi for flight tracking. The ESXi server just has some small testing VMs I use. This rack also has a dedicated 20A circuit from the subpanel, which happens to be very close to it.

Even though it's very hot here, I have never had an issue with it. Everything just keeps on chugging along.

Some cameras and APs are also connected to this switch.

Q&A

There are currently no questions here. Please email your questions to blog[at]networkprofile.org, and let me know what name you want used.